package io.micronaut.http.server.netty.websocket;

import io.micronaut.websocket.WebSocketBroadcaster;

import io.micronaut.websocket.WebSocketSession;

import io.micronaut.websocket.annotation.*;

import java.util.function.Predicate;

@ServerWebSocket("/chat/{topic}/{username}") (1)

public class ChatServerWebSocket {

private WebSocketBroadcaster broadcaster;

public ChatServerWebSocket(WebSocketBroadcaster broadcaster) {

this.broadcaster = broadcaster;

}

@OnOpen (2)

public void onOpen(String topic, String username, WebSocketSession session) {

String msg = "[" + username + "] Joined!";

broadcaster.broadcastSync(msg, isValid(topic, session));

}

@OnMessage (3)

public void onMessage(

String topic,

String username,

String message,

WebSocketSession session) {

String msg = "[" + username + "] " + message;

broadcaster.broadcastSync(msg, isValid(topic, session)); (4)

}

@OnClose (5)

public void onClose(

String topic,

String username,

WebSocketSession session) {

String msg = "[" + username + "] Disconnected!";

broadcaster.broadcastSync(msg, isValid(topic, session));

}

private Predicate<WebSocketSession> isValid(String topic, WebSocketSession session) {

return s -> s != session && topic.equalsIgnoreCase(s.getUriVariables().get("topic", String.class, null));

}

}Table of Contents

Micronaut

Natively Cloud Native

Version: 1.0.0

1 Introduction

Micronaut is a modern, JVM-based, full stack microservices framework designed for building modular, easily testable microservice applications.

Micronaut is developed by the creators of the Grails framework and takes inspiration from lessons learnt over the years building real-world applications from monoliths to microservices using Spring, Spring Boot and Grails.

Micronaut aims to provide all the tools necessary to build full-featured microservice applications, including:

-

Dependency Injection and Inversion of Control (IoC)

-

Sensible Defaults and Auto-Configuration

-

Configuration and Configuration Sharing

-

Service Discovery

-

HTTP Routing

-

HTTP Client with client-side load-balancing

At the same time Micronaut aims to avoid the downsides of frameworks like Spring, Spring Boot and Grails by providing:

-

Fast startup time

-

Reduced memory footprint

-

Minimal use of reflection

-

Minimal use of proxies

-

Easy unit testing

Historically, frameworks such as Spring and Grails were not designed to run in scenarios such as server-less functions, Android apps, or low memory-footprint microservices. In contrast, Micronaut is designed to be suitable for all of these scenarios.

This goal is achieved through the use of Java’s annotation processors, which are usable on any JVM language that supports them, as well as an HTTP Server and Client built on Netty. In order to provide a similar programming model to Spring and Grails, these annotation processors precompile the necessary metadata in order to perform DI, define AOP proxies and configure your application to run in a microservices environment.

Many of the APIs within Micronaut are heavily inspired by Spring and Grails. This is by design, and aids in bringing developers up to speed quickly.

1.1 What's New?

Apart from issues resolved and minor enhancements since the last release of Micronaut, this section covers significant new features.

Improvements from RC3 to GA

GraalVM support has been updated to use Docker instead of a ./build-native-image script allowing usage of other platforms.

The official GraalVM Docker images are used, so to build a native image you can simply execute docker build . -t myimage in the root of your project.

Support for GraalVM Native Images

Micronaut now includes experimental support for compiling down to GraalVM native images using the nativeimage tool shipped as part of Graal (1.0.0 RC6 and above).

This is possible due to to Micronaut’s reflection-free approach to Dependency Injection and AOP.

See the section on GraalVM support in the user guide for more information.

Swagger / OpenAPI Documentation Support

Micronaut now includes the ability to generate Swagger (OpenAPI) YAML at compile time using the language neutral visitor API and the interfaces defined by the io.micronaut.inject.ast package.

See the section on OpenAPI / Swagger Support in the user guide for more information.

Native WebSocket Support

Built-in support for WebSocket for both the client and the server has been added. The following example is a simple server chat implementation:

See the section on WebSocket Support in the user guide for more information.

CLI Commands for WebSockets

The Micronaut CLI now includes two new commands for generating WebSocket clients and servers.

$ mn create-websocket-server MyChat

| Rendered template WebsocketServer.java to destination src/main/java/example/MyChatServer.java

$ mn create-websocket-client MyChat

| Rendered template WebsocketClient.java to destination src/main/java/example/MyChatClient.javaCompilation Time Validation

The validation module can now be added to the annotationProcessor classpath and which will result in additional compile time checks, ensuring that users are using the framework correctly. For example, the following route method:

@Get("/hello/{name}")

public Single<String> hello(@NotBlank String na) {

return Single.just("Hello " + na + "!");

}Will produce the following error at compile time:

hello-world-java/src/main/java/example/HelloController.java:34: error: The route declares a uri variable named [name], but no corresponding method argument is present

public Single<String> hello(@NotBlank String na) {

^This lessens the need for IDE support designed specifically for Micronaut.

Experimental JMX Support for Endpoints

Experimental support for exposing management endpoints over JMX has been added via the jmx module. See the section on JMX Support for more information on how to use this feaure.

Multitenancy support

Latest release includes Multitenancy integration into the framework. Features includes tenant resolution, propagation and integration with GORM which supports discriminator, table and schema multitenancy modes.

Token Propagation

Latest release includes Token Propagation capabilities into the security module of the the framework. It enables the propagation of valid tokens to outgoing requests triggered by the original request in a transparent way.

Ldap Authentication

Latest release supports authentication with LDAP out of the box. Moreover, the LDAP authentication in Micronaut supports configuration of one or more LDAP servers to authenticate with.

Documentation Improvements

The documentation you are reading has been improved with a new configuration reference button at the top that contains a reference produced at compile time of all the available configuration options in Micronaut.

Dependency Upgrades

The following dependency upgrades occurred in this release:

-

Netty

4.1.29→4.1.30 -

RxJava

2.2.0→2.2.2 -

Hibernate Core

5.3.4→5.3.6.Final -

Jackson

2.9.6→2.9.7 -

Reactor

3.1.8→3.2.0 -

SnakeYAML

1.20→1.23 -

Jaeger

0.30.4→0.31.0 -

Brave '5.2.0` →

5.4.2 -

Zipkin Reporter

2.7.7→2.7.9 -

Spring

5.0.8→5.1.0

Amazon Route 53 Service Discovery and AWS Systems Manager Parameter Store Support

Use Amazon Route 53 Service Discovery directly for service discovery instead of running an instance of tools like Consul. You can also use AWS Systems Manager Parameter Store for shared configuration between nodes.

2 Quick Start

The following sections will walk you through a Quick start on how to use Micronaut to setup a basic "Hello World" application.

Before getting started ensure you have a Java 8 or above SDK installed and it is recommended having a suitable IDE such as IntelliJ IDEA.

To follow the Quick Start it is also recommended that you have the Micronaut CLI installed.

2.1 Build/Install the CLI

The best way to install Micronaut on Unix systems is with SDKMAN which greatly simplifies installing and managing multiple Micronaut versions.

2.1.1 Install with Sdkman

Before updating make sure you have latest version of SDKMAN installed. If not, run

$ sdk updateIn order to install Micronaut, run following command:

$ sdk install micronautYou can also specify the version to the sdk install command.

$ sdk install micronaut 1.0.0You can find more information about SDKMAN usage on the SDKMAN Docs

You should now be able to run the Micronaut CLI.

$ mn

| Starting interactive mode...

| Enter a command name to run. Use TAB for completion:

mn>2.1.2 Install through Binary on Windows

-

Download the latest binary from Micronaut Website

-

Extract the binary to appropriate location (For example:

C:/micronaut) -

Create an environment variable

MICRONAUT_HOMEwhich points to the installation directory i.e.C:/micronaut -

Update the

PATHenvironment variable, append%MICRONAUT_HOME%\bin.

You should now be able to run the Micronaut CLI from the command prompt as follows:

$ mn

| Starting interactive mode...

| Enter a command name to run. Use TAB for completion:

mn>2.1.3 Building from Source

Clone the repository:

$ git clone https://github.com/micronaut-projects/micronaut-core.gitcd into the micronaut-core directory and run the following command:

$ ./gradlew cli:fatJarThis will create the farJar for CLI.

In your shell profile (~/.bash_profile if you are using the Bash shell), export the MICRONAUT_HOME directory and add the CLI path to your PATH:

export MICRONAUT_HOME=~/path/to/micronaut-core

export PATH="$PATH:$MICRONAUT_HOME/cli/build/bin"Reload your terminal or source your shell profile with source:

> source ~/.bash_profileYou should now be able to run the Micronaut CLI.

$ mn

| Starting interactive mode...

| Enter a command name to run. Use TAB for completion:

mn>

You can also point SDKMAN to local installation for dev purpose using following command sdk install micronaut dev /path/to/checkout/cli/build

|

2.2 Creating a Server Application

Although not required to use Micronaut, the Micronaut CLI is the quickest way to create a new server application.

Using the CLI you can create a new Micronaut application in either Groovy, Java or Kotlin (the default is Java).

The following command creates a new "Hello World" server application in Java with a Gradle build:

$ mn create-app hello-world

You can supply --build maven if you wish to create a Maven based build instead

|

The previous command will create a new Java application in a directory called hello-world featuring a Gradle a build. The application can be run with ./gradlew run:

$ ./gradlew run

> Task :run

[main] INFO io.micronaut.runtime.Micronaut - Startup completed in 972ms. Server Running: http://localhost:28933By default the Micronaut HTTP server is configured to run on port 8080.

See the section Running Server on a Specific Port in the user guide for more options.

In order to create a service that responds to "Hello World" you first need a controller. The following is an example of a controller written in Java and located in src/main/java/example/helloworld:

import io.micronaut.http.MediaType;

import io.micronaut.http.annotation.*;

@Controller("/hello") (1)

public class HelloController {

@Get(produces = MediaType.TEXT_PLAIN) (2)

public String index() {

return "Hello World"; (3)

}

}| 1 | The class is defined as a controller with the @Controller annotation mapped to the path /hello |

| 2 | The @Get annotation is used to map the index method to all requests that use an HTTP GET |

| 3 | A String "Hello World" is returned as the result |

If you start the application and send a request to the /hello URI then the text "Hello World" is returned:

$ curl http://localhost:8080/hello

Hello World2.3 Setting up an IDE

The application created in the previous section contains a "main class" located in src/main/java that looks like the following:

package hello.world;

import io.micronaut.runtime.Micronaut;

public class Application {

public static void main(String[] args) {

Micronaut.run(Application.class);

}

}This is the class that is run when running the application via Gradle or via deployment. You can also run the main class directly within your IDE if it is configured correctly.

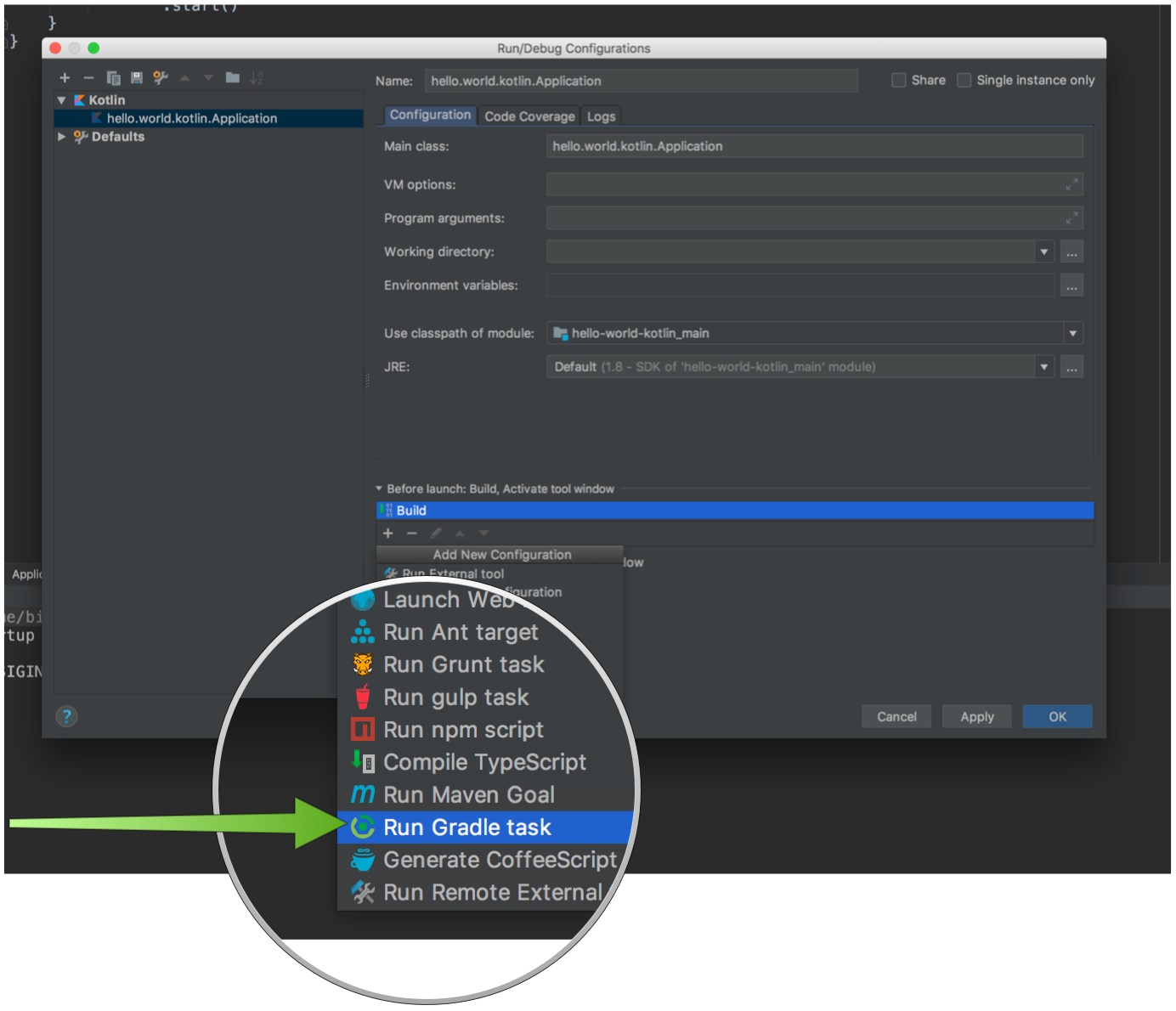

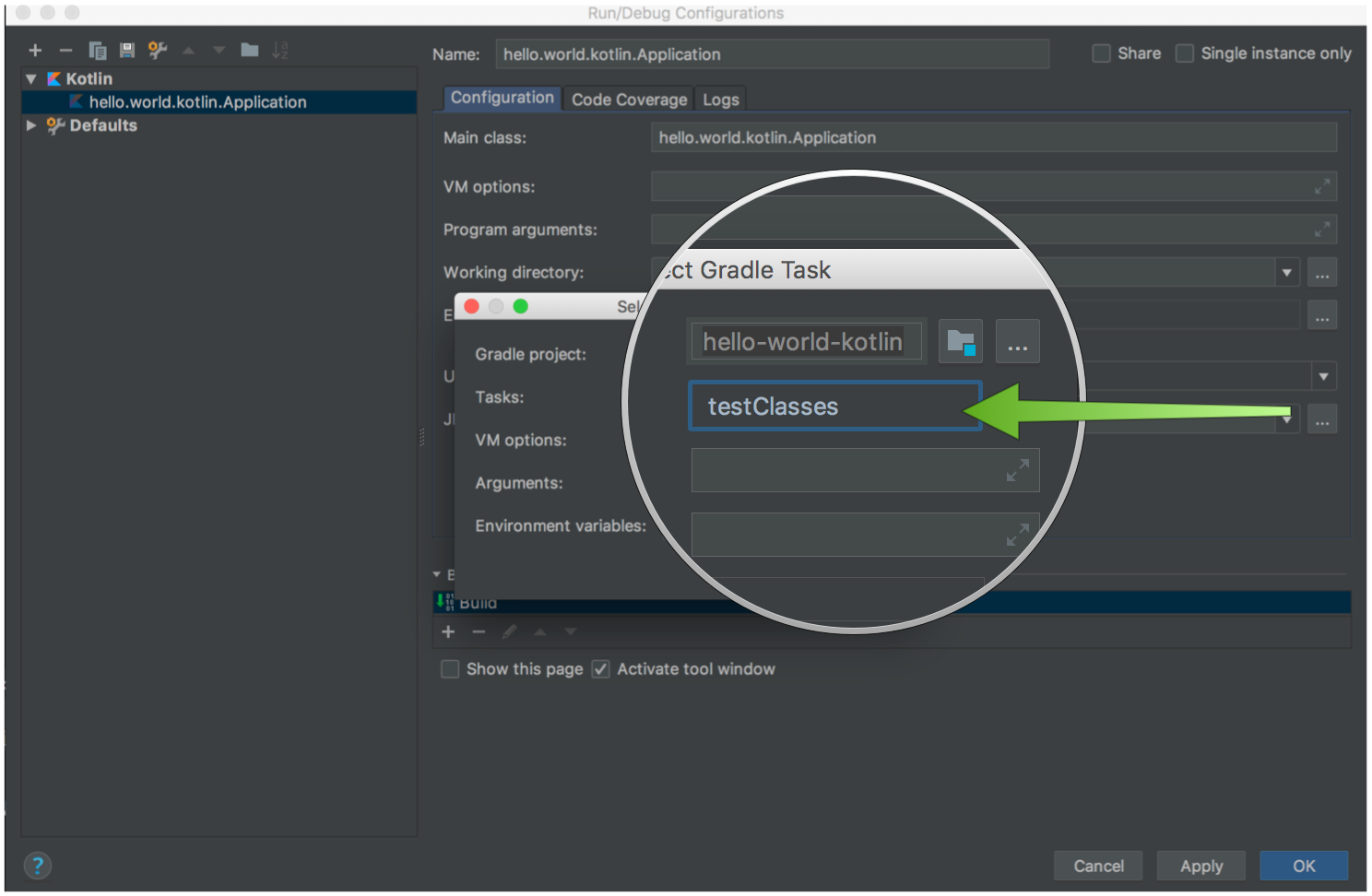

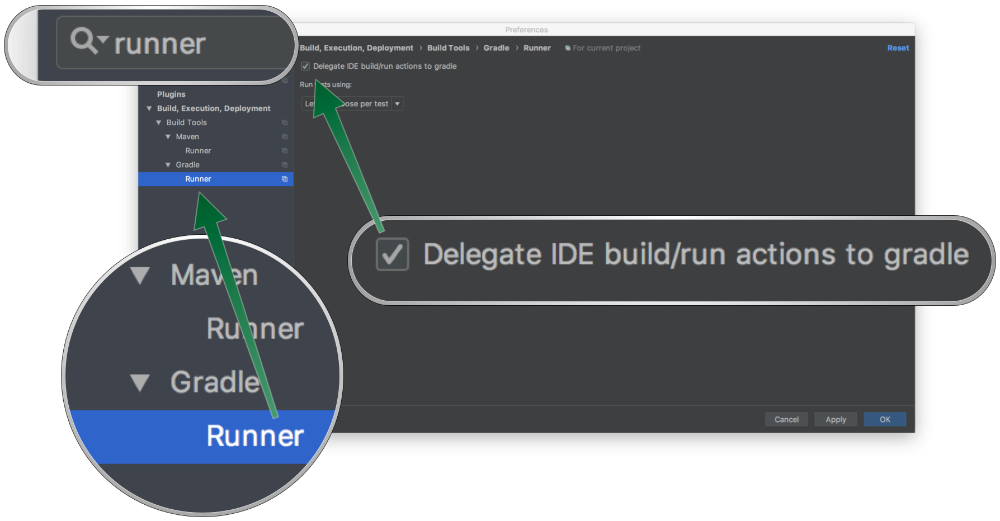

Configuring IntelliJ IDEA

To import a Micronaut project into IntelliJ IDEA simply open the build.gradle or pom.xml file and follow the instructions to import the project.

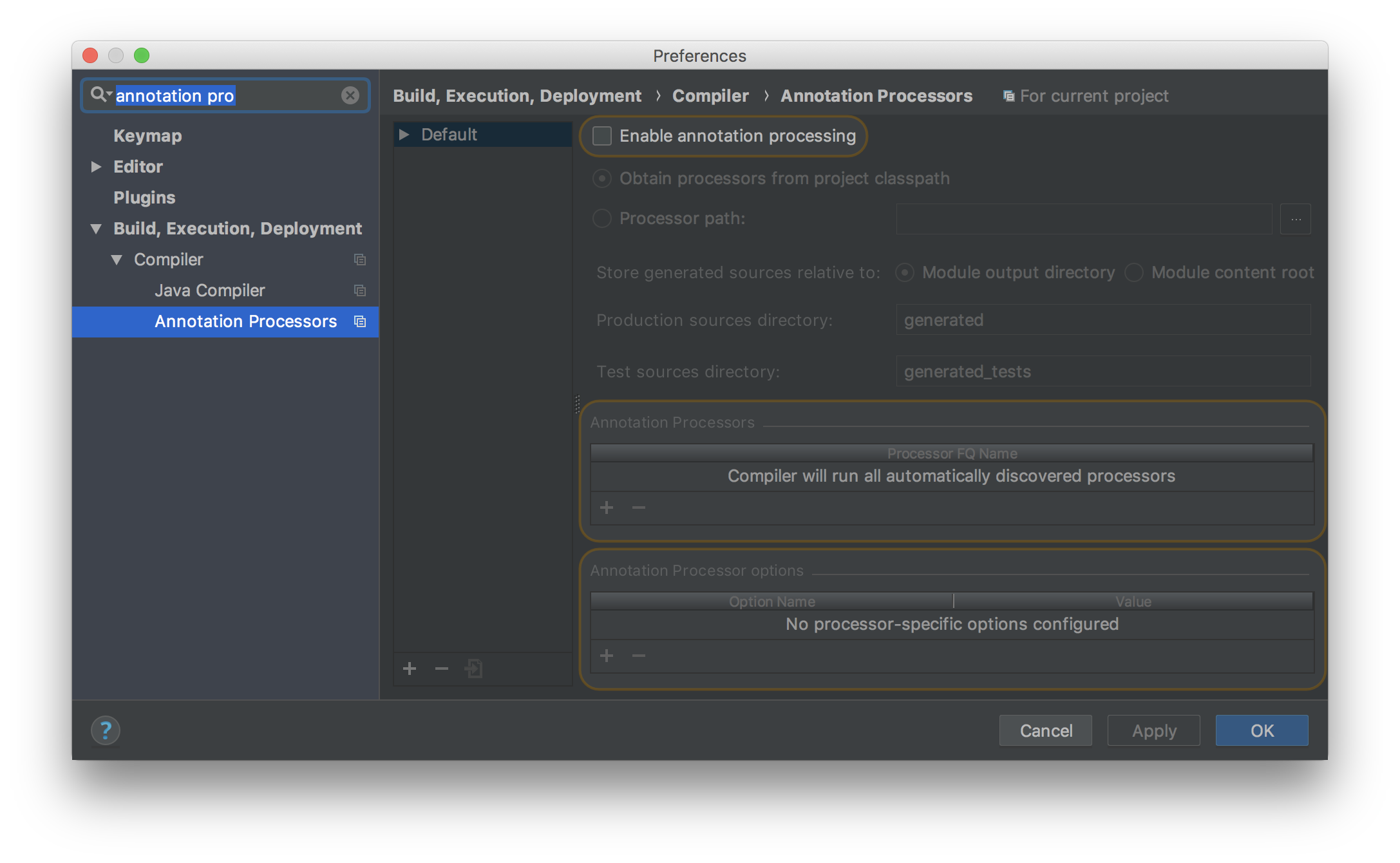

For IntelliJ IDEA if you plan to use the IntelliJ compiler then you should enable annotation processing under the "Build, Execution, Deployment → Compiler → Annotation Processors" by ticking the "Enable annotation processing" checkbox:

Once you have enabled annotation processing in IntelliJ you can run the application and tests directly within the IDE without the need of an external build tool such as Gradle or Maven.

Configuring Eclipse IDE

If you wish to use Eclipse IDE, it is recommended you import your Micronaut project into Eclipse using either Gradle BuildShip for Gradle or M2Eclipse for Maven.

As of this writing, the latest stable version of Eclipse has incomplete support for Java annotation processors, this has been resolved in Eclipse 4.9 M2 and above, which you will need to download.

Eclipse and Gradle

Once you have setup Eclipse 4.9 M2 or above with Gradle BuildShip first run the gradle eclipse task from the root of your project then import the project by selecting File → Import then choosing Gradle → Existing Gradle Project and navigating to the root directory of your project (where the build.gradle is located).

Eclipse and Maven

For Eclipse 4.9 M2 and above with Maven you need the following Eclipse plugins:

Once installed you need to import the project by selecting File → Import then choosing Maven → Existing Maven Project and navigating to the root directory of your project (where the pom.xml is located).

You should then enable annotation processing by opening Eclipse → Preferences and navigating to Maven → Annotation Processing and selecting the option Automatically configure JDT APT.

2.4 Creating a Client

As mentioned previously, Micronaut includes both an HTTP server and an HTTP client. A low-level HTTP client is provided out of the box which you can use to test the HelloController created in the previous section.

For example, the following test is written using Spock Framework:

import io.micronaut.context.ApplicationContext

import io.micronaut.http.HttpRequest

import io.micronaut.http.client.HttpClient

import io.micronaut.runtime.server.EmbeddedServer

import spock.lang.*

class HelloControllerSpec extends Specification {

@Shared @AutoCleanup EmbeddedServer embeddedServer =

ApplicationContext.run(EmbeddedServer) (1)

@Shared @AutoCleanup HttpClient client = HttpClient.create(embeddedServer.URL) (2)

void "test hello world response"() {

expect:

client.toBlocking() (3)

.retrieve(HttpRequest.GET('/hello')) == "Hello World" (4)

}

}| 1 | The EmbeddedServer is configured as a shared and automatically cleaned up test field |

| 2 | A HttpClient instance shared field is also defined |

| 3 | The test using the toBlocking() method to make a blocking call |

| 4 | The retrieve method returns the response of the controller as a String |

In addition to a low-level client, Micronaut features a declarative, compile-time HTTP client, powered by the Client annotation.

To create a client, simply create an interface annotated with @Client. For example:

import io.micronaut.http.annotation.Get;

import io.micronaut.http.client.annotation.Client;

import io.reactivex.Single;

@Client("/hello") (1)

public interface HelloClient {

@Get (2)

Single<String> hello(); (3)

}| 1 | The @Client annotation is used with value that is a relative path to the current server |

| 2 | The same @Get annotation used on the server is used to define the client mapping |

| 3 | A RxJava Single is returned with the value read from the server |

To test the HelloClient simply retrieve it from the ApplicationContext associated with the server:

import io.micronaut.runtime.server.EmbeddedServer

import spock.lang.*

class HelloClientSpec extends Specification {

@Shared @AutoCleanup EmbeddedServer embeddedServer =

ApplicationContext.run(EmbeddedServer) (1)

@Shared HelloClient client = embeddedServer

.applicationContext

.getBean(HelloClient) (2)

void "test hello world response"() {

expect:

client.hello().blockingGet() == "Hello World" (3)

}

}| 1 | The EmbeddedServer is run |

| 2 | The HelloClient is retrieved from the ApplicationContext |

| 3 | The client is invoked using RxJava’s blockingGet method |

The Client annotation produces an implementation automatically for you at compile time without the need to use proxies or runtime reflection.

The Client annotation is very flexible. See the section on the Micronaut HTTP Client for more information.

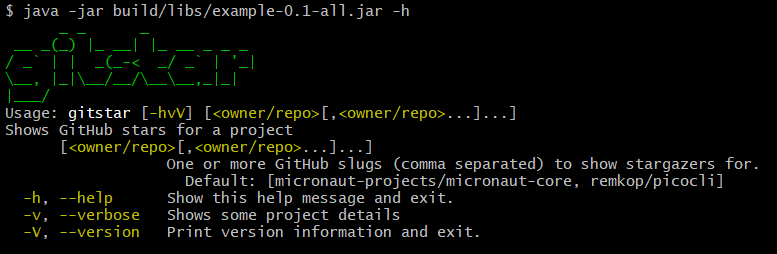

2.5 Deploying the Application

To deploy a Micronaut application you create a runnable JAR file by running ./gradlew assemble or ./mvnw package.

The constructed JAR file can then be executed with java -jar. For example:

$ java -jar build/libs/hello-world-all.jarThe runnable JAR can also easily be packaged within a Docker container or deployed to any Cloud infrastructure that supports runnable JAR files.

3 Inversion of Control

When most developers think of Inversion of Control (also known as Dependency Injection and referred to as such from this point onwards) the Spring Framework comes to mind.

Micronaut takes heavy inspiration from Spring, and in fact, the core developers of Micronaut are former SpringSource/Pivotal engineers now working for OCI.

Unlike Spring which relies exclusively on runtime reflection and proxies, Micronaut, on the other hand, uses compile time data to implement dependency injection.

This is a similar approach taken by tools such as Google’s Dagger, which is designed primarily with Android in mind. Micronaut, on the other hand, is designed for building server-side microservices and provides many of the same tools and utilities as Spring does but without using reflection or caching excessive amounts of reflection metadata.

The goals of the Micronaut IoC container are summarized as:

-

Use reflection as a last resort

-

Avoid proxies

-

Optimize start-up time

-

Reduce memory footprint

-

Provide clear, understandable error handling

Note that the IoC part of Micronaut can be used completely independently of Micronaut itself for whatever application type you may wish to build. To do so all you need to do is configure your build appropriately to include the micronaut-inject-java dependency as an annotation processor. For example with Gradle:

plugins {

id "net.ltgt.apt" version "0.18" // <1>

}

...

dependencies {

annotationProcessor "io.micronaut:micronaut-inject-java:1.0.0" // <2>

compile "io.micronaut:micronaut-inject:1.0.0"

...

}| 1 | Apply the Annotation Processing plugin |

| 2 | Include the minimal dependencies required to perform dependency injection |

For the Groovy language you should include micronaut-inject-groovy in the compileOnly scope.

|

The entry point for IoC is then the ApplicationContext interface, which includes a run method. The following example demonstrates using it:

ApplicationContexttry (ApplicationContext context = ApplicationContext.run()) { (1)

MyBean myBean = context.getBean(MyBean.class); (2)

// do something with your bean

}| 1 | Run the ApplicationContext |

| 2 | Retrieve a bean that has been dependency injected |

| The example uses Java’s try-with-resources syntax to ensure the ApplicationContext is cleanly shutdown when the application exits. |

3.1 Defining Beans

Micronaut implements the JSR-330 (javax.inject) - Dependency Injection for Java specification hence to use Micronaut you simply use the annotations provided by javax.inject.

The following is a simple example:

import javax.inject.*

interface Engine { (1)

int getCylinders()

String start()

}

@Singleton (2)

class V8Engine implements Engine {

int cylinders = 8

String start() {

"Starting V8"

}

}

@Singleton

class Vehicle {

final Engine engine

Vehicle(Engine engine) { (3)

this.engine = engine

}

String start() {

engine.start()

}

}| 1 | A common Engine interface is defined |

| 2 | A V8Engine implementation is defined and marked with Singleton scope |

| 3 | The Engine is injected via constructor injection |

To perform dependency injection simply run the BeanContext using the run() method and lookup a bean using getBean(Class), as per the following example:

import io.micronaut.context.*

...

Vehicle vehicle = BeanContext.run()

.getBean(Vehicle)

println( vehicle.start() )Micronaut will automatically discover dependency injection metadata on the classpath and wire the beans together according to injection points you define.

Micronaut supports the following types of dependency injection:

-

Constructor injection (must be one public constructor or a single contructor annotated with

@Inject) -

Field injection

-

JavaBean property injection

-

Method parameter injection

3.2 How Does it Work?

At this point, you may be wondering how Micronaut performs the above dependency injection without requiring reflection.

The key is a set of AST transformations (for Groovy) and annotation processors (for Java) that generate classes that implement the BeanDefinition interface.

The ASM byte-code library is used to generate classes and because Micronaut knows ahead of time the injection points, there is no need to scan all of the methods, fields, constructors, etc. at runtime like other frameworks such as Spring do.

Also since reflection is not used in the construction of the bean, the JVM can inline and optimize the code far better resulting in better runtime performance and reduced memory consumption. This is particularly important for non-singleton scopes where the application performance depends on bean creation performance.

In addition, with Micronaut your application startup time and memory consumption is not bound to the size of your codebase in the same way as a framework that uses reflection. Reflection based IoC frameworks load and cache reflection data for every single field, method, and constructor in your code. Thus as your code grows in size so do your memory requirements, whilst with Micronaut this is not the case.

3.3 The BeanContext

The BeanContext is a container object for all your bean definitions (it also implements BeanDefinitionRegistry).

It is also the point of initialization for Micronaut. Generally speaking however, you don’t have to interact directly with the BeanContext API and can simply use javax.inject annotations and the annotations defined within io.micronaut.context.annotation package for your dependency injection needs.

3.4 Injectable Container Types

In addition to being able to inject beans Micronaut natively supports injecting the following types:

| Type | Description | Example |

|---|---|---|

An |

|

|

An |

|

|

A lazy |

|

|

A native array of beans of a given type |

|

|

A |

|

3.5 Bean Qualifiers

If you have multiple possible implementations for a given interface that you want to inject, you need to use a qualifier.

Once again Micronaut leverages JSR-330 and the Qualifier and Named annotations to support this use case.

Qualifying By Name

To qualify by name you can use the Named annotation. For example, consider the following classes:

import javax.inject.*

interface Engine { (1)

int getCylinders()

String start()

}

@Singleton

class V6Engine implements Engine { (2)

int cylinders = 6

String start() {

"Starting V6"

}

}

@Singleton

class V8Engine implements Engine { (3)

int cylinders = 8

String start() {

"Starting V8"

}

}

@Singleton

class Vehicle {

final Engine engine

@Inject Vehicle(@Named('v8') Engine engine) { (4)

this.engine = engine

}

String start() {

engine.start() (5)

}

}| 1 | The Engine interface defines the common contract |

| 2 | The V6Engine class is the first implementation |

| 3 | The V8Engine class is the second implementation |

| 4 | The Named annotation is used to indicate the V8Engine implementation is required |

| 5 | Calling the start method prints: "Starting V8" |

You can also declare @Named at the class level of a bean to explicitly define the name of the bean.

Qualifying By Annotation

In addition to being able to qualify by name, you can build your own qualifiers using the Qualifier annotation. For example, consider the following annotation:

import javax.inject.Qualifier

import java.lang.annotation.Retention

import static java.lang.annotation.RetentionPolicy.RUNTIME

@Qualifier

@Retention(RUNTIME)

@interface V8 {

}The above annotation is itself annotated with the @Qualifier annotation to designate it as a qualifier. You can then use the annotation at any injection point in your code. For example:

@Inject Vehicle(@V8 Engine engine) {

this.engine = engine

}Primary and Secondary Beans

Primary is a qualifier that indicates that a bean is the primary bean that should be selected in the case of multiple possible interface implementations.

Consider the following example:

public interface ColorPicker {

String color()

}Given a common interface called ColorPicker that is implemented by multiple classes.

import io.micronaut.context.annotation.Primary;

import io.micronaut.context.annotation.Requires;

import javax.inject.Singleton;

@Primary

@Singleton

public class Green implements ColorPicker {

@Override

public String color() {

return "green";

}

}The Green bean is a ColorPicker, but is annotated with @Primary.

import io.micronaut.context.annotation.Requires;

import javax.inject.Singleton;

@Singleton

public class Blue implements ColorPicker {

@Override

public String color() {

return "blue";

}

}The Blue bean is also a ColorPicker and hence you have two possible candidates when injecting the ColorPicker interface. Since Green is the primary it will always be favoured.

@Controller("/test")

public class TestController {

protected final ColorPicker colorPicker;

public TestController(ColorPicker colorPicker) { (1)

this.colorPicker = colorPicker;

}

@Get

public String index() {

return colorPicker.color();

}

}| 1 | Although there are two ColorPicker beans, Green gets injected due to the @Primary annotation. |

If multiple possible candidates are present and no @Primary is defined then a NonUniqueBeanException will be thrown.

In addition to @Primary, there is also a Secondary annotation which causes the opposite effect and allows de-prioritizing a bean.

3.6 Scopes

Micronaut features an extensible bean scoping mechanism based on JSR-303. The following default scopes are supported:

3.6.1 Built-In Scopes

| Type | Description |

|---|---|

Singleton scope indicates only one instance of the bean should exist |

|

Context scope indicates that the bean should be created at the same time as the |

|

Prototype scope indicates that a new instance of the bean is created each time it is injected |

|

Infrastructure is a |

|

|

|

|

Additional scopes can be added by defining a @Singleton bean that implements the CustomScope interface.

Note that with Micronaut when starting a ApplicationContext by default @Singleton scoped beans are created lazily and on demand. This is by design and to optimize startup time.

If this is presents are problem for your use case you have the option of using the @Context annotation which binds the lifecycle of your object to the lifecycle of the ApplicationContext. In other words when the ApplicationContext is started your bean will be created.

Alternatively you can annotate any @Singleton scoped bean with @Parallel which allows parallel initialization of your bean without impacting overall startup time.

| If your bean fails to initialize in parallel then the application will be automatically shutdown. |

3.6.2 Refreshable Scope

The Refreshable scope is a custom scope that allows a bean’s state to be refreshed via:

-

/refreshendpoint. -

Publication of a RefreshEvent.

The following example, illustrates the @Refreshable scope behavior.

@Refreshable (1)

static class WeatherService {

String forecast

@PostConstruct

void init() {

forecast = "Scattered Clouds ${new Date().format('dd/MMM/yy HH:ss.SSS')}" (2)

}

String latestForecast() {

return forecast

}

}| 1 | The WeatherService is annotated with @Refreshable scope which stores an instance until a refresh event is triggered |

| 2 | The value of the forecast property is set to a fixed value when the bean is created and won’t change until the bean is refreshed |

If you invoke the latestForecast() twice, you will see identical responses such as "Scattered Clouds 01/Feb/18 10:29.199".

When the /refresh endpoint is invoked or a RefreshEvent is published then the instance is invalidated and a new instance is created the next time the object is requested. For example:

applicationContext.publishEvent(new RefreshEvent())3.6.3 Scopes on Meta Annotations

Scopes can be defined on Meta annotations that you can then apply to your classes. Consider the following example meta annotation:

import static java.lang.annotation.RetentionPolicy.RUNTIME;

import io.micronaut.context.annotation.Requires;

import javax.inject.Singleton;

import java.lang.annotation.Documented;

import java.lang.annotation.Retention;

@Requires(classes = Car.class ) (1)

@Singleton (2)

@Documented

@Retention(RUNTIME)

public @interface Driver {

}| 1 | The scope declares a requirement on a Car class using Requires |

| 2 | The annotation is declared as @Singleton |

In the example above the @Singleton annotation is applied to the @Driver annotation which results in every class that is annotated with @Driver being regarded as singleton.

Note that in this case it is not possible to alter the scope when the annotation is applied. For example, the following will not override the scope declared by @Driver and is invalid:

@Driver

@Prototype

class Foo {}If you wish for the scope to be overridable you should instead using the DefaultScope annotation on @Driver which allows a default scope to be specified if none other is present:

@Requires(classes = Car.class )

@DefaultScope(Singleton.class) (1)

@Documented

@Retention(RUNTIME)

public @interface Driver {

}| 1 | DefaultScope is used to declare which scope to be used if non is present |

3.7 Bean Factories

In many cases, you may want to make available as a bean a class that is not part of your codebase such as those provided by third-party libraries. In this case, you cannot annotate the already compiled class. Instead, you should implement a Factory.

A factory is a class annotated with the Factory annotation that provides 1 or more methods annotated with the Bean annotation.

The return types of methods annotated with @Bean are the bean types. This is best illustrated by an example:

import io.micronaut.context.annotation.*

import javax.inject.*

@Singleton

class CrankShaft {

}

class V8Engine implements Engine {

final int cylinders = 8

final CrankShaft crankShaft

V8Engine(CrankShaft crankShaft) {

this.crankShaft = crankShaft

}

String start() {

"Starting V8"

}

}

@Factory

class EngineFactory {

@Bean

@Singleton

Engine v8Engine(CrankShaft crankShaft) {

new V8Engine(crankShaft)

}

}In this case, the V8Engine is built by the EngineFactory class' v8Engine method. Note that you can inject parameters into the method and these parameters will be resolved as beans.

A factory can also have multiple methods annotated with @Bean each one returning a distinct bean type.

If you take this approach, then you should not invoke other methods annotated with @Bean internally within the class. Instead, inject the types via parameters.

|

3.8 Conditional Beans

At times you may want a bean to load conditionally based on various potential factors including the classpath, the configuration, the presence of other beans etc.

The Requires annotation provides the ability to define one or many conditions on a bean.

Consider the following example:

@Singleton

@Requires(beans = DataSource.class)

@Requires(property = "datasource.url")

public class JdbcBookService implements BookService {

DataSource dataSource;

public JdbcBookService(DataSource dataSource) {

this.dataSource = dataSource;

}

}The above bean defines two requirements. The first indicates that a DataSource bean must be present for the bean to load. The second requirement ensures that the datasource.url property is set before loading the JdbcBookService bean.

Kotlin currently does not support repeatable annotations. Use the @Requirements annotation when multiple requires are needed. For example, @Requirements(Requires(…), Requires(…)). See https://youtrack.jetbrains.com/issue/KT-12794 to track this feature.

|

If you have multiple requirements that you find you may need to repeat on multiple beans then you can define a meta-annotation with the requirements:

@Documented

@Retention(RetentionPolicy.RUNTIME)

@Target({ElementType.PACKAGE, ElementType.TYPE})

@Requires(beans = DataSource.class)

@Requires(property = "datasource.url")

public @interface RequiresJdbc {

}In the above example an annotation called RequiresJdbc is defined that can then be used on the JdbcBookService instead:

@RequiresJdbc

public class JdbcBookService implements BookService {

...

}If you have multiple beans that need to fulfill a given requirement before loading then you may want to consider a bean configuration group, as explained in the next section.

Configuration Requirements

The @Requires annotation is very flexible and can be used for a variety of use cases. The following table summarizes some of the possibilities:

| Requirement | Example |

|---|---|

Require the presence of one ore more classes |

|

Require the absence of one ore more classes |

|

Require the presence one or more beans |

|

Require the absence of one or more beans |

|

Require the environment to be applied |

|

Require the environment to not be applied |

|

Require the presence of another configuration package |

|

Require the absence of another configuration package |

|

Require particular SDK version |

|

Requires classes annotated with the given annotations to be available to the application via package scanning |

|

Require a property with an optional value |

|

Require a property to not be part of the configuration |

|

Additional Notes on Property Requirements.

Adding a requirement on a property has some additional functionality. You can require the property to be a certain value, not be a certain value, and use a default in those checks if its not set.

@Requires(property="foo") (1)

@Requires(property="foo", value="John") (2)

@Requires(property="foo", value="John", defaultValue="John") (3)

@Requires(property="foo", notEquals="Sally") (4)| 1 | Requires the property to be "yes", "YES", "true", "TRUE", "y" or "Y" |

| 2 | Requires the property to be "John" |

| 3 | Requires the property to be "John" or not set |

| 4 | Requires the property to not be "Sally" or not set |

Debugging Conditional Beans

If you have multiple conditions and complex requirements it may become difficult to understand why a particular bean has not been loaded.

To help resolve issues with conditional beans you can enable debug logging for the io.micronaut.context.condition package which will log the reasons why beans were not loaded.

<logger name="io.micronaut.context.condition" level="DEBUG"/>3.9 Bean Replacement

One significant difference between Micronaut’s Dependency Injection system and Spring is the way beans can be replaced.

In a Spring application, beans have names and can effectively be overridden simply by creating a bean with the same name, regardless of the type of the bean. Spring also has the notion of bean registration order, hence in Spring Boot you have @AutoConfigureBefore and @AutoConfigureAfter the control how beans override each other.

This strategy leads to difficult to debug problems, for example:

-

Bean loading order changes, leading to unexpected results

-

A bean with the same name overrides another bean with a different type

To avoid these problems, Micronaut’s DI has no concept of bean names or load order. Beans have a type and a Qualifier. You cannot override a bean of a completely different type with another.

A useful benefit of Spring’s approach is that it allows overriding existing beans to customize behaviour. In order to support the same ability, Micronaut’s DI provides an explicit @Replaces annotation, which integrates nicely with support for Conditional Beans and clearly documents and expresses the intention of the developer.

Any existing bean can be replaced by another bean that declares @Replaces. For example, consider the following class:

@Singleton

@Requires(beans = DataSource.class)

public class JdbcBookService implements BookService {

DataSource dataSource;

public JdbcBookService(DataSource dataSource) {

this.dataSource = dataSource;

}

}You can define a class in src/test/java that replaces this class just for your tests:

@Replaces(JdbcBookService.class) (1)

@Singleton

public class MockBookService implements BookService {

Map<String, Book> bookMap = new LinkedHashMap<>();

@Override

public Book findBook(String title) {

return bookMap.get(title);

}

}| 1 | The MockBookService declares that it replaces JdbcBookService |

The @Replaces annotation also supports a factory argument. That argument allows the replacement of factory beans in their entirety or specific types created by the factory.

For example, it may be desired to replace all or part of the given factory class:

@Factory

public class BookFactory {

@Singleton

Book novel() {

return new Book("A Great Novel");

}

@Singleton

TextBook textBook() {

return new TextBook("Learning 101");

}

}| To replace a factory in its entirety, it is necessary that your factory methods match the return types of all of the methods in the replaced factory. |

In this example, the BookFactory#textBook() will not be replaced because this factory does not have a factory method that returns a TextBook.

@Factory

@Replaces(factory = BookFactory.class)

public class CustomBookFactory {

@Singleton

Book otherNovel() {

return new Book("An OK Novel");

}

}It may be the case that you don’t wish for the factory methods to be replaced, except for a select few. For that use case, you can apply the @Replaces annotation on the method and denote the factory that it should apply to.

@Factory

public class TextBookFactory {

@Singleton

@Replaces(value = TextBook.class, factory = BookFactory.class)

TextBook textBook() {

return new TextBook("Learning 305");

}

}The BookFactory#novel() method will not be replaced because the TextBook class is defined in the annotation.

3.10 Bean Configurations

A bean @Configuration is a grouping of multiple bean definitions within a package.

The @Configuration annotation is applied at the package level and informs Micronaut that the beans defined with the package form a logical grouping.

The @Configuration annotation is typically applied to package-info class. For example:

@Configuration

package my.package

import io.micronaut.context.annotation.ConfigurationWhere this grouping becomes useful is when the bean configuration is made conditional via the @Requires annotation. For example:

@Configuration

@Requires(beans = javax.sql.DataSource)

package my.packageIn the above example, all bean definitions within the annotated package will only be loaded and made available if a javax.sql.DataSource bean is present. This allows you to implement conditional auto-configuration of bean definitions.

3.11 Life-Cycle Methods

If you wish for a particular method to be invoked when a bean is constructed then you can use the javax.annotation.PostConstruct annotation:

import javax.annotation.PostConstruct (1)

import javax.inject.Singleton

@Singleton

class V8Engine implements Engine {

int cylinders = 8

boolean initialized = false (2)

String start() {

if(!initialized) throw new IllegalStateException("Engine not initialized!")

return "Starting V8"

}

@PostConstruct (3)

void initialize() {

this.initialized = true

}

}| 1 | The PostConstruct annotation is imported |

| 2 | A field is defined that requires initialization |

| 3 | A method is annotated with @PostConstruct and will be invoked once the object is constructed and fully injected. |

3.12 Context Events

Micronaut supports a general event system through the context. The ApplicationEventPublisher API is used to publish events and the ApplicationEventListener API is used to listen to events. The event system is not limited to the events that Micronaut publishes and can be used for custom events created by the users.

Publishing Events

The ApplicationEventPublisher API supports events of any type, however all events that Micronaut publishes extend ApplicationEvent.

To publish an event, obtain an instance of ApplicationEventPublisher either directly from the context or through dependency injection, and execute the publishEvent method with your event object.

@Singleton

public class MyBean {

@Inject ApplicationEventPublisher eventPublisher;

void doSomething() {

eventPublisher.publishEvent(...);

}

}

Publishing an event is synchronous by default! The publishEvent method will not return until all listeners have been executed. Move this work off to a thread pool if it is time intensive.

|

Listening for Events

To listen to an event, register a bean that implements ApplicationEventListener where the generic type is the type of event the listener should be executed for.

ApplicationEventListener@Singleton

public class DoOnStartup implements ApplicationEventListener<ServiceStartedEvent> {

@Override

void onApplicationEvent(ServiceStartedEvent event) {

...

}

}| The supports method can be overridden to further clarify events that should be processed. |

Alternatively you can use the @EventListener annotation if you do not wish to specifically implement an interface:

@EventListenerimport io.micronaut.runtime.event.annotation.EventListener;

...

@Singleton

public class DoOnStartup {

@EventListener

void onStartup(ServiceStartedEvent event) {

...

}

}If your listener performs work that could take a while then you can use the @Async annotation to run the operation on a separate thread:

@EventListenerimport io.micronaut.runtime.event.annotation.EventListener;

import io.micronaut.scheduling.annotation.Async;

...

@Singleton

public class DoOnStartup {

@EventListener

@Async

void onStartup(ServiceStartedEvent event) {

...

}

}The event listener will by default run on the scheduled executor. You can configure this thread pool as required in application.yml:

micronaut:

executors:

scheduled:

type: scheduled

core-pool-size: 303.13 Bean Events

You can hook into the creation of beans using one of the following interfaces:

-

BeanInitializedEventListener - allows modifying or replacing of a bean after the properties have been set but prior to

@PostConstructevent hooks. -

BeanCreatedEventListener - allows modifying or replacing of a bean after the bean is fully initialized and all

@PostConstructhooks called.

The BeanInitializedEventListener interface is commonly used in combination with Factory beans. Consider the following example:

import javax.inject.*

class V8Engine implements Engine {

final int cylinders = 8

double rodLength (1)

String start() {

return "Starting V${cylinders} [rodLength=$rodLength]"

}

}

@Factory

class EngineFactory {

private V8Engine engine

double rodLength = 5.7

@PostConstruct

void initialize() {

engine = new V8Engine(rodLength: rodLength) (2)

}

@Bean

@Singleton

Engine v8Engine() {

return engine (3)

}

}

@Singleton

class EngineInitializer implements BeanInitializedEventListener<EngineFactory> { (4)

@Override

EngineFactory onInitialized(BeanInitializingEvent<EngineFactory> event) {

EngineFactory engineFactory = event.bean

engineFactory.rodLength = 6.6 (5)

return event.bean

}

}| 1 | The V8Engine class defines a rodLength property |

| 2 | The EngineFactory initializes the value of rodLength and creates the instance |

| 3 | The created instance is returned as a Bean |

| 4 | The BeanInitializedEventListener interface is implemented to listen for the initialization of the factory |

| 5 | Within the onInitialized method the rodLength is overridden prior to the engine being created by the factory bean. |

The BeanCreatedEventListener interface is more typically used to decorate or enhance a fully initialized bean by creating a proxy for example.

3.14 Bean Annotation Metadata

The methods provided by Java’s AnnotatedElement API in general don’t provide the ability to introspect annotations without loading the annotations themselves, nor do they provide any ability to introspect annotation stereotypes (Often called meta-annotations, an annotation stereotype is where an annotation is annotated with another annotation, essentially inheriting its behaviour).

To solve this problem many frameworks produce runtime metadata or perform expensive reflection to analyze the annotations of a class.

Micronaut instead produces this annotation metadata at compile time, avoiding expensive reflection and saving on memory.

The BeanContext API can be used to obtain a reference to a BeanDefinition which implements the AnnotationMetadata interface.

For example the following code will obtain all bean definitions annotated with a particular stereotype:

BeanContext beanContext = ... // obtain the bean context

Collection<BeanDefinition> definitions =

beanContext.getBeanDefinitions(Qualifiers.byStereotype(Controller.class))

for(BeanDefinition definition : definitions) {

AnnotationValue<Controller> controllerAnn = definition.getAnnotation(Controller.class);

// do something with the annotation

}The above example will find all BeanDefinition annotated with @Controller regardless whether @Controller is used directly or inherited via an annotation stereotype.

Note that the getAnnotation method and the variations of the method return a AnnotationValue type and not a Java annotation. This is by design, and you should generally try to work with this API when reading annotation values, the reason being that synthesizing a proxy implementation is worse from a performance and memory consumption perspective.

If you absolutely require a reference to an annotation instance you can use the synthesize method, which will create a runtime proxy that implements the annotation interface:

Controller controllerAnn = definition.synthesize(Controller.class);This approach is not recommended however, as it requires reflection and increases memory consumption due to the use of runtime created proxies and should be used as a last resort (for example if you need to an instance of the annotation to integrate with a third party library).

Aliasing / Mapping Annotations

There are times when you may want to alias the value one of annotation member to the value of annotation annotation member. To do this you can use the @AliasFor annotation to alias the value of one member to the value of another.

A common use case is for example when an annotation defines value() member, but also supports other members. For example the @Client annotation:

public @interface Client {

/**

* @return The URL or service ID of the remote service

*/

@AliasFor(member = "id") (1)

String value() default "";

/**

* @return The ID of the client

*/

@AliasFor(member = "value") (2)

String id() default "";

}| 1 | The value member also sets the id member |

| 2 | The id member also sets the value member |

With these aliases in place, regardless whether you define @Client("foo") or @Client(id="foo") both the value and id members are always set, making it much easier to parse and deal with the annotation.

If you do not have control over the annotation then another approach is to use a AnnotationMapper. To create a AnnotationMapper you must following the following steps:

-

Implement the AnnotationMapper interface

-

Define a

META-INF/services/io.micronaut.inject.annotation.AnnotationMapperfile referencing the implementation class -

Add the JAR file containing the implementation to the

annotationProcessorclasspath (kaptfor Kotlin)

Because AnnotationMapper implementations need to be on the annotation processor classpath they should generally be in a project that includes few external dependencies to avoid polluting the annotation processor classpath.

|

As an example the the AnnotationMapper that maps the javax.annotation.security.PermitAll standard Java annotation to the internal Micronaut Secured annotation looks like the following:

@Internal

public class PermitAllAnnotationMapper implements TypedAnnotationMapper<PermitAll> { (1)

@Override

public Class<PermitAll> annotationType() {

return PermitAll.class;

}

@Override

public List<AnnotationValue<?>> map(AnnotationValue<PermitAll> annotation, VisitorContext visitorContext) { (2)

List<AnnotationValue<?>> annotationValues = new ArrayList<>(1);

annotationValues.add(

AnnotationValue.builder(Secured.class) (3)

.value(SecurityRule.IS_ANONYMOUS) (4)

.build()

);

return annotationValues;

}

}| 1 | The annotation type to be mapped is specified as a generic type argument. |

| 2 | The map method receives a AnnotationValue with the values for the annotation. |

| 3 | One or more annotations can be returned, in this case @Secured. |

| 4 | Annotations values can be provided. |

| The example above implements the TypedAnnotationMapper interface which requires the annotation class itself to be on the annotation processor classpath. If that is undesirable (such as for projects that mix annotations with runtime code) then you should use NamedAnnotationMapper instead. |

3.15 Micronaut Beans And Spring

The MicronautBeanProcessor

class is a BeanFactoryPostProcessor which will add Micronaut beans to a

Spring Application Context. An instance of MicronautBeanProcessor should

be added to the Spring Application Context. MicronautBeanProcessor requires

a constructor parameter which represents a list of the types of

Micronaut beans which should be added the Spring Application Context. The

processor may be used in any Spring application. As an example, a Grails 3

application could take advantage of MicronautBeanProcessor to add all of the

Micronaut HTTP Client beans to the Spring Application Context with something

like the folowing:

// grails-app/conf/spring/resources.groovy

import io.micronaut.spring.beans.MicronautBeanProcessor

import io.micronaut.http.client.annotation.Client

beans = {

httpClientBeanProcessor MicronautBeanProcessor, Client

}Multiple types may be specified:

// grails-app/conf/spring/resources.groovy

import io.micronaut.spring.beans.MicronautBeanProcessor

import io.micronaut.http.client.annotation.Client

import com.sample.Widget

beans = {

httpClientBeanProcessor MicronautBeanProcessor, [Client, Widget]

}In a non-Grails application something similar may be specified using any of Spring’s bean definition styles:

@Configuration

class ByAnnotationTypeConfig {

@Bean

MicronautBeanProcessor beanProcessor() {

new MicronautBeanProcessor(Prototype, Singleton)

}

}3.16 Android Support

Since Micronaut dependency injection is based on annotation processors and doesn’t rely on reflection, it can be used on Android when using the Android plugin 3.0.0 or above.

This allows you to use the same application framework for both your Android client and server implementation.

Configuring Your Android Build

To get started you must add the Micronaut annotation processors to the processor classpath using the annotationProcessor dependency configuration.

The Micronaut micronaut-inject-java dependency should be included in both the annotationProcessor and compileOnly scopes of your Android build configuration:

dependencies {

...

annotationProcessor "io.micronaut:micronaut-inject-java:1.0.0"

compileOnly "io.micronaut:micronaut-inject-java:1.0.0"

...

}If you use lint as part of your build you may also need to disable the invalid packages check since Android includes a hard coded check that regards the javax.inject package as invalid unless you are using Dagger:

android {

...

lintOptions {

lintOptions { warning 'InvalidPackage' }

}

}You can find more information on configuring annotations processors in the Android documentation.

Micronaut inject-java dependency uses Android Java 8 support features.

|

Enabling Dependency Injection

Once you have configured the classpath correctly, the next step is start the ApplicationContext.

The following example demonstrates creating a subclass of android.app.Application for that purpose:

import android.app.Activity;

import android.app.Application;

import android.os.Bundle;

import io.micronaut.context.ApplicationContext;

import io.micronaut.context.env.Environment;

public class BaseApplication extends Application { (1)

private ApplicationContext ctx;

public BaseApplication() {

super();

}

@Override

public void onCreate() {

super.onCreate();

ctx = ApplicationContext.run(MainActivity.class, Environment.ANDROID); (2)

registerActivityLifecycleCallbacks(new ActivityLifecycleCallbacks() { (3)

@Override

public void onActivityCreated(Activity activity, Bundle bundle) {

ctx.inject(activity);

}

... // shortened for brevity, it is not necessary to implement other methods

});

}

@Override

public void onTerminate() {

super.onTerminate();

if(ctx != null && ctx.isRunning()) { (4)

ctx.stop();

}

}

}| 1 | Extend the android.app.Application class |

| 2 | Run the ApplicationContext with the ANDROID environment |

| 3 | To allow dependency injection of Android Activity instances register a ActivityLifecycleCallbacks instance |

| 4 | Stop the ApplicationContext when the application terminates |

4 Application Configuration

Configuration in Micronaut takes inspiration from both Spring Boot and Grails, integrating configuration properties from multiple sources directly into the core IoC container.

Configuration can by default be provided in either Java properties, YAML, JSON or Groovy files. The convention is to search for a file called application.yml, application.properties, application.json or application.groovy.

In addition, just like Spring and Grails, Micronaut allows overriding any property via system properties or environment variables.

Each source of configuration is modeled with the PropertySource interface and the mechanism is extensible allowing the implementation of additional PropertySourceLoader implementations.

4.1 The Environment

The application environment is modelled by the Environment interface, which allows specifying one or many unique environment names when creating an ApplicationContext.

ApplicationContext applicationContext = ApplicationContext.run("test", "android");

Environment environment = applicationContext.getEnvironment();

assertTrue(environment.getActiveNames().contains("test"));

assertTrue(environment.getActiveNames().contains("android"));The active environment names serve the purpose of allowing loading different configuration files depending on the environment and also using the @Requires annotation to conditionally load beans or bean @Configuration packages.

In addition, Micronaut will attempt to detect the current environments. For example within a Spock or JUnit test the TEST environment will be automatically active.

Additional active environments can be specified using the micronaut.environments system property or the MICRONAUT_ENVIRONMENTS environment variable. These can be specified as a comma separated list. For example:

$ java -Dmicronaut.environments=foo,bar -jar myapp.jarThe above activates environments called foo and bar.

Finally, the Cloud environment names are also detected. See the section on Cloud Configuration for more information.

4.2 Externalized Configuration with PropertySources

Additional PropertySource instances can be added to the environment prior to initializing the ApplicationContext.

ApplicationContext applicationContext = ApplicationContext.run(

PropertySource.of(

"test",

CollectionUtils.mapOf(

"micronaut.server.host", "foo",

"micronaut.server.port", 8080

)

),

"test", "android");

Environment environment = applicationContext.getEnvironment();

assertEquals(

environment.getProperty("micronaut.server.host", String.class).orElse("localhost"),

"foo"

);The PropertySource.of method can be used to create a ProperySource from a map of values.

Alternatively one can register a PropertySourceLoader by creating a META-INF/services/io.micronaut.context.env.PropertySourceLoader containing a reference to the class name of the PropertySourceLoader.

Included PropertySource Loaders

Micronaut by default contains PropertySourceLoader implementations that load properties from the given locations and priority:

-

Command line arguments

-

Properties from

SPRING_APPLICATION_JSON(for Spring compatibility) -

Properties from

MICRONAUT_APPLICATION_JSON -

Java System Properties

-

OS environment variables

-

Enviroment-specific properties from

application-{environment}.{extension}(Either.properties,.json,.ymlor.groovyproperty formats supported) -

Application-specific properties from

application.{extension}(Either.properties,.json,.ymlor.groovyproperty formats supported)

To use custom properties from local files, you can either call your application with -Dmicronaut.config.files=myfile.yml or set the environment variable MICRONAUT_CONFIG_FILES=myfile.yml. The value can be a comma-separated list.

|

Property Value Placeholders

Micronaut includes a property placeholder syntax which can be used to reference configuration properties both within configuration values and with any Micronaut annotation (see @Value and the section on Configuration Injection).

| Programmatic usage is also possible via the PropertyPlaceholderResolver interface. |

The basic syntax is to wrap a reference to a property in ${…}. For example in application.yml:

myapp:

endpoint: http://${micronaut.server.host}:${micronaut.server.port}/fooThe above example embeds references to the micronaut.server.host and micronaut.server.port properties.

You can specify default values by defining a value after the : character. For example:

myapp:

endpoint: http://${micronaut.server.host:localhost}:${micronaut.server.port:8080}/fooThe above example will default to localhost and port 8080 if no value is found (rather than throwing an exception). Note that if default value itself contains a : character, you should escape it using back ticks:

myapp:

endpoint: ${server.address:`http://localhost:8080`}/fooThe above example tries to read a server.address property otherwise fallbacks back to http://localhost:8080, since the address has a : character we have to escape it with back ticks.

Property Value Binding

Note that these property references should always be in kebab case (lowercase and hyphen-separated) when placing references in code or in placeholder values. In other words you should use for example micronaut.server.default-charset and not micronaut.server.defaultCharset.

Micronaut still allows specifying the latter in configuration, but normalizes the properties into kebab case form to optimize memory consumption and reduce complexity when resolving properties. The following table summarizes how properties are normalized from different sources:

| Configuration Value | Resulting Properties | Property Source |

|---|---|---|

|

|

Properties, YAML etc. |

|

|

Properties, YAML etc. |

|

|

Properties, YAML etc. |

|

|

Environment Variable |

|

|

Environment Variable |

Environment variables are given special treatment to allow the definition of environment variables to be more flexible.

Using Random Properties

You can use random values by using the following properties. These can be used in configuration files as variables like the following.

micronaut:

application:

name: myapplication

instance:

id: ${random.shortuuid}| Property | Value |

|---|---|

random.port |

An available random port number |

random.int |

Random int |

random.integer |

Random int |

random.long |

Random long |

random.float |

Random float |

random.shortuuid |

Random UUID of only 10 chars in length (Note: As this isn’t full UUID, collision COULD occur) |

random.uuid |

Random UUID with dashes |

random.uuid2 |

Random UUID without dashes |

4.3 Configuration Injection

You can inject configuration values into beans with Micronaut using the @Value annotation.

Using the @Value Annotation

Consider the following example:

import io.micronaut.context.annotation.Value

import javax.inject.Singleton

@Singleton

class EngineImpl implements Engine {

@Value('${my.engine.cylinders:6}') (1)

protected int cylinders

@Override

int getCylinders() {

this.cylinders

}

String start() { (2)

"Starting V${cylinders} Engine"

}

}| 1 | The @Value annotation accepts a string that can have embedded placeholder values (the default value can be provided by specifying a value after the colon : character). |

| 2 | The injected value can then be used within code. |

Note that @Value can also be used to inject a static value, for example the following will inject the number 10:

@Value("10")

int number;However it is definitely more useful when used to compose injected values combining static content and placeholders. For example to setup a URL:

@Value("http://${my.host}:${my.port}")

URL url;In the above example the URL is constructed from 2 placeholder properties that must be present in configuration: my.host and my.port.

Remember that to specify a default value in a placeholder expression, you should use the colon : character, however if the default you are trying to specify has a colon then you should escape the value with back ticks. For example:

@Value("${my.url:`http://foo.com`}")

URL url;Note that there is nothing special about @Value itself regarding the resolution of property value placeholders.

Due to Micronaut’s extensive support for annotation metadata you can in fact use property placeholder expressions on any annotation. For example, to make the path of a @Controller configurable you can do:

@Controller("${hello.controller.path:/hello}")

class HelloController {

...

}In the above case if hello.controller.path is specified in configuration then the controller will be mapped to the path specified otherwise it will be mapped to /hello.

You can also make the target server for @Client configurable (although service discovery approaches are often better), for example:

@Client("${my.server.url:`http://localhost:8080`}")

interface HelloClient {

...

}In the above example the property my.server.url can be used to configure the client otherwise the client will fallback to a localhost address.

Using the @Property Annotation

Recall that the @Value annotation receives a String value which is a mix of static content and placeholder expressions. This can lead to confusion if you attempt to do the following:

@Value@Value("my.url")

String url;In the above case the value my.url will be injected and set to the url field and not the value of the my.url property from your application configuration, this is because @Value only resolves placeholders within the value specified to it.

If you wish to inject a specific property name then you may be better off using @Property:

@Property@Property(name = "my.url")

String url;The above will instead inject the value of the my.url property resolved from application configuration. You can also use this feature to resolve sub maps. For example, consider the following configuration:

application.yml configurationdatasources:

default:

name: 'mydb'

jpa:

default:

properties:

hibernate:

hbm2ddl:

auto: update

show_sql: trueIf you wish to resolve a flattened map containing only the properties starting with hibernate then you can do so with @Property, for example:

@Property@Property(name = "jpa.default.properties")

Map<String, String> jpaProperties;The injected map will contain the keys hibernate.hbm2ddl.auto and hibernate.show_sql and their values.

| The @MapFormat annotation can be used to customize the injected map depending whether you want nested keys, flat keys and it allows customization of the key style via the StringConvention enum. |

4.4 Configuration Properties

You can create type safe configuration by creating classes that are annotated with @ConfigurationProperties.

Micronaut will produce a reflection-free @ConfigurationProperties bean and will also at compile time calculate the property paths to evaluate, greatly improving the speed and efficiency of loading @ConfigurationProperties.

An example of a configuration class can be seen below:

import io.micronaut.context.annotation.ConfigurationProperties

import javax.validation.constraints.Min

import javax.validation.constraints.NotBlank

@ConfigurationProperties('my.engine') (1)

class EngineConfig {

@NotBlank (2)

String manufacturer = "Ford" (3)

@Min(1L)

int cylinders

CrankShaft crankShaft = new CrankShaft()

@ConfigurationProperties('crank-shaft')

static class CrankShaft { (4)

Optional<Double> rodLength = Optional.empty() (5)

}

}| 1 | The @ConfigurationProperties annotation takes the configuration prefix |

| 2 | You can use javax.validation to validate the configuration |

| 3 | Default values can be assigned to the property |

| 4 | Static inner classes can provided nested configuration |

| 5 | Optional configuration values can be wrapped in a java.util.Optional |

Once you have prepared a type safe configuration it can simply be injected into your objects like any other bean:

@Singleton

class EngineImpl implements Engine {

final EngineConfig config

EngineImpl(EngineConfig config) { (1)

this.config = config

}

@Override

int getCylinders() {

config.cylinders

}

String start() { (2)

"${config.manufacturer} Engine Starting V${config.cylinders} [rodLength=${config.crankShaft.rodLength.orElse(6.0d)}]"

}

}| 1 | Inject the EngineConfig bean |

| 2 | Use the configuration properties |

Configuration values can then be supplied from one of the PropertySource instances. For example:

ApplicationContext applicationContext = ApplicationContext.run(

['my.engine.cylinders': '8'],

"test"

)

Vehicle vehicle = applicationContext

.getBean(Vehicle)

println(vehicle.start())The above example prints: "Ford Engine Starting V8 [rodLength=6.0]"

Note for more complex configurations you can structure @ConfigurationProperties beans through inheritance.

For example creating a subclass of EngineConfig with @ConfigurationProperties('bar') will resolve all properties under the path my.engine.bar.

Property Type Conversion

When resolving properties Micronaut will use the ConversionService bean to convert properties. You can register additional converters for types not supported by micronaut by defining beans that implement the TypeConverter interface.

Micronaut features some built-in conversions that are useful, which are detailed below.

Duration Conversion

Durations can be specified by appending the unit with a number. Supported units are s, ms, m etc. The following table summarizes examples:

| Configuration Value | Resulting Value |

|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

Duration of 15 minutes using ISO-8601 format |

For example to configure the default HTTP client read timeout:

micronaut:

http:

client:

read-timeout: 15sList / Array Conversion

Lists and arrays can be specified in Java properties files as comma-separated values or in YAML using native YAML lists. The generic types are used to convert the values. For example in YAML:

my:

app:

integers:

- 1

- 2

urls:

- http://foo.com

- http://bar.comOr in Java properties file format:

my.app.integers=1,2

my.app.urls=http://foo.com,http://bar.comAlternatively you can use an index:

my.app.integers[0]=1

my.app.integers[1]=2For the above example configurations you can define properties to bind to with the target type supplied via generics:

List<Integer> integers;

List<URL> urls;Readable Bytes

You can annotate any setter parameter with @ReadableBytes to allow the value to be set using a shorthand syntax for specifying bytes, kilobytes etc. For example the following is taken from HttpClientConfiguration:

@ReadableBytespublic void setMaxContentLength(@ReadableBytes int maxContentLength) {

this.maxContentLength = maxContentLength;

}With the above in place you can set micronaut.http.client.max-content-length using the following values:

| Configuration Value | Resulting Value |

|---|---|

|

10 megabytes |

|

10 kilobytes |

|

10 gigabytes |

|

A raw byte length |

Formatting Dates

The @Format annotation can be used on any setter to allow the date format to be specified when binding javax.time date objects.

@Format for Datespublic void setMyDate(@Format("yy-mm-dd") LocalDate date) {

this.myDate = date;

}Configuration Builder

Many existing frameworks and tools already use builder-style classes to construct configuration.

To support the ability for a builder style class to be populated with configuration values, the @ConfigurationBuilder annotation can be used. ConfigurationBuilder can be added to a field or method in a class annotated with @ConfigurationProperties.

Since there is no consistent way to define builders in the Java world, one or more method prefixes can be specified in the annotation to support builder methods like withXxx or setXxx. If the builder methods have no prefix, assign an empty string to the parameter.

A configuration prefix can also be specified to tell Micronaut where to look for configuration values. By default, the builder methods will use the configuration prefix defined at the class level @ConfigurationProperties annotation.

For example:

import io.micronaut.context.annotation.ConfigurationBuilder

import io.micronaut.context.annotation.ConfigurationProperties

@ConfigurationProperties('my.engine') (1)

class EngineConfig {

@ConfigurationBuilder(prefixes = "with") (2)

EngineImpl.Builder builder = EngineImpl.builder()

@ConfigurationBuilder(prefixes = "with", configurationPrefix = "crank-shaft") (3)

CrankShaft.Builder crankShaft = CrankShaft.builder()

}| 1 | The @ConfigurationProperties annotation takes the configuration prefix |

| 2 | The first builder can be configured with the class configuration prefix |

| 3 | The second builder can be configured with the class configuration prefix + the configurationPrefix value. |

By default, only builder methods that take a single argument are supported. To support methods with no arguments, set the allowZeroArgs parameter of the annotation to true.

|

Just like in the previous example, we can construct an EngineImpl. Since we are using a builder, a factory class can be used to build the engine from the builder.

import io.micronaut.context.annotation.Bean

import io.micronaut.context.annotation.Factory

import javax.inject.Singleton

@Factory

class EngineFactory {

@Bean

@Singleton

EngineImpl buildEngine(EngineConfig engineConfig) {

engineConfig.builder.build(engineConfig.crankShaft)

}

}The engine that was returned can then be injected anywhere an engine is depended on.

Configuration values can be supplied from one of the PropertySource instances. For example:

ApplicationContext applicationContext = ApplicationContext.run(

['my.engine.cylinders':'4',

'my.engine.manufacturer': 'Subaru',

'my.engine.crank-shaft.rod-length': 4],

"test"

)

Vehicle vehicle = applicationContext

.getBean(Vehicle)

println(vehicle.start())The above example prints: "Subaru Engine Starting V4 [rodLength=4.0]"

MapFormat

For some use cases it may be desirable to accept a map of arbitrary configuration properties that can be supplied to a bean, especially if the bean represents a third-party API where not all of the possible configuration properties are known by the developer. For example, a datasource may accept a map of configuration properties specific to a particular database driver, allowing the user to specify any desired options in the map without coding every single property explicitly.

For this purpose, the MapFormat annotation allows you to bind a map to a single configuration property, and specify whether to accept a flat map of keys to values, or a nested map (where the values may be additional maps].

import io.micronaut.core.convert.format.MapFormat

@ConfigurationProperties('my.engine')

class EngineConfig {

@Min(1L)

int cylinders

@MapFormat(transformation = MapFormat.MapTransformation.FLAT) (1)

Map<Integer, String> sensors

}| 1 | Note the transformation argument to the annotation; possible values are MapTransformation.FLAT (for flat maps) and MapTransformation.NESTED (for nested maps) |

@Singleton

class EngineImpl implements Engine {

@Inject EngineConfig config

@Override

Map getSensors() {

config.sensors

}

String start() {

"Engine Starting V${config.cylinders} [sensors=${sensors.size()}]"

}

}Now a map of properties can be supplied to the my.engine.sensors configuration property.