
Micronaut Azure

Micronaut projects for Microsoft Azure

Version: 5.8.0

1 Introduction

This project provides integrations between Micronaut and Microsoft Azure.

2 Release History

For this project, you can find a list of releases (with release notes) here:

3 What's new?

- Micronaut Azure HTTP Functions pass the Micronaut HTTP Server TCK (Test Compatibility Kit). You can write your code as if you target the Netty runtime but deploy it as an Azure Function with an HTTP trigger.

4 Breaking Changes

5 Azure SDK

The Azure SDK module provides integration between Micronaut and Microsoft Azure SDK for Java.

First, add a dependency on the micronaut-azure-sdk module:

implementation("io.micronaut.azure:micronaut-azure-sdk")

<dependency>
    <groupId>io.micronaut.azure</groupId>
    <artifactId>micronaut-azure-sdk</artifactId>
</dependency>

5.1 Authentication

The micronaut-azure-sdk module supports these authentication options:

DefaultAzureCredential

The DefaultAzureCredential is appropriate for most scenarios where the application ultimately runs in the Azure Cloud. It combines credentials that are commonly used to authenticate when deployed, with credentials that are used to authenticate in a development environment.

The DefaultAzureCredential is used when no other credential type is specified or explicitly enabled.
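To make the chaining idea concrete, here is a minimal Java sketch of a credential chain that tries each source in order and returns the first token it obtains. The CredentialSource interface and the ChainedCredential class are hypothetical illustrations, not Azure SDK types; the actual sources and their order inside DefaultAzureCredential are defined by the Azure Identity library.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical credential source for illustration; NOT the Azure SDK's TokenCredential. */
@FunctionalInterface
interface CredentialSource {
    /** Returns a token if this source can authenticate in the current environment, otherwise empty. */
    Optional<String> tryGetToken();
}

/** Tries each source in order and returns the first token obtained. */
final class ChainedCredential {
    private final List<CredentialSource> sources;

    ChainedCredential(List<CredentialSource> sources) {
        this.sources = List.copyOf(sources); // immutable copy keeps the order fixed
    }

    String getToken() {
        for (CredentialSource source : sources) {
            Optional<String> token = source.tryGetToken();
            if (token.isPresent()) {
                return token.get(); // the first source that succeeds wins
            }
        }
        throw new IllegalStateException("No credential source in the chain could authenticate");
    }
}
```

For example, a chain whose environment source finds nothing, followed by a CLI source that returns a token, yields the CLI token. This is why the same application can authenticate one way on a developer machine and another way once deployed.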

EnvironmentCredential

The EnvironmentCredential is a credential provider that supplies token credentials based on environment variables. It expects one of the following sets of environment variables:

- AZURE_CLIENT_ID
- AZURE_CLIENT_SECRET
- AZURE_TENANT_ID

or:

- AZURE_CLIENT_ID
- AZURE_CLIENT_CERTIFICATE_PATH
- AZURE_TENANT_ID

or:

- AZURE_CLIENT_ID
- AZURE_USERNAME
- AZURE_PASSWORD
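The following sketch, which relies only on the JDK, checks which of the variable sets above is fully present in an environment map. EnvironmentCredentialCheck is a hypothetical diagnostic helper; it does not reproduce how the Azure SDK itself chooses between the sets.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;

/** Hypothetical helper: reports which documented EnvironmentCredential variable set is complete. */
final class EnvironmentCredentialCheck {
    /** The variable sets listed above: client secret, client certificate, username/password. */
    static final List<List<String>> COMBINATIONS = List.of(
            List.of("AZURE_CLIENT_ID", "AZURE_CLIENT_SECRET", "AZURE_TENANT_ID"),
            List.of("AZURE_CLIENT_ID", "AZURE_CLIENT_CERTIFICATE_PATH", "AZURE_TENANT_ID"),
            List.of("AZURE_CLIENT_ID", "AZURE_USERNAME", "AZURE_PASSWORD"));

    /** Returns the first set whose variables are all present and non-blank in {@code env}. */
    static Optional<List<String>> firstComplete(Map<String, String> env) {
        for (List<String> combination : COMBINATIONS) {
            boolean complete = combination.stream()
                    .allMatch(name -> env.get(name) != null && !env.get(name).isBlank());
            if (complete) {
                return Optional.of(combination);
            }
        }
        return Optional.empty();
    }
}
```

Calling firstComplete(System.getenv()) reports which set, if any, is available to the running process.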

ClientCertificateCredential

The ClientCertificateCredential authenticates the created service principal through its client certificate. Visit Client certificate credential for more details.

The ClientCertificateCredential supports both PFX and PEM certificate file types.

Using a PEM certificate:

Properties:
azure.credential.client-certificate.client-id=<client-id>
azure.credential.client-certificate.pem-certificate-path=<path to pem certificate>

YAML:
azure:
  credential:
    client-certificate:
      client-id: <client-id>
      pem-certificate-path: <path to pem certificate>

TOML:
[azure]
[azure.credential]
[azure.credential.client-certificate]
client-id="<client-id>"
pem-certificate-path="<path to pem certificate>"

Groovy:
azure {
  credential {
    clientCertificate {
      clientId = "<client-id>"
      pemCertificatePath = "<path to pem certificate>"
    }
  }
}

HOCON:
{
  azure {
    credential {
      client-certificate {
        client-id = "<client-id>"
        pem-certificate-path = "<path to pem certificate>"
      }
    }
  }
}

JSON:
{
  "azure": {
    "credential": {
      "client-certificate": {
        "client-id": "<client-id>",
        "pem-certificate-path": "<path to pem certificate>"
      }
    }
  }
}

Using a PFX certificate:

Properties:
azure.credential.client-certificate.client-id=<client-id>
azure.credential.client-certificate.pfx-certificate-path=<path to pfx certificate>
azure.credential.client-certificate.pfx-certificate-password=<pfx certificate password>

YAML:
azure:
  credential:
    client-certificate:
      client-id: <client-id>
      pfx-certificate-path: <path to pfx certificate>
      pfx-certificate-password: <pfx certificate password>

TOML:
[azure]
[azure.credential]
[azure.credential.client-certificate]
client-id="<client-id>"
pfx-certificate-path="<path to pfx certificate>"
pfx-certificate-password="<pfx certificate password>"

Groovy:
azure {
  credential {
    clientCertificate {
      clientId = "<client-id>"
      pfxCertificatePath = "<path to pfx certificate>"
      pfxCertificatePassword = "<pfx certificate password>"
    }
  }
}

HOCON:
{
  azure {
    credential {
      client-certificate {
        client-id = "<client-id>"
        pfx-certificate-path = "<path to pfx certificate>"
        pfx-certificate-password = "<pfx certificate password>"
      }
    }
  }
}

JSON:
{
  "azure": {
    "credential": {
      "client-certificate": {
        "client-id": "<client-id>",
        "pfx-certificate-path": "<path to pfx certificate>",
        "pfx-certificate-password": "<pfx certificate password>"
      }
    }
  }
}

Optionally, you can configure the tenant ID by setting the property azure.credential.client-certificate.tenant-id.

ClientSecretCredential

The ClientSecretCredential authenticates the created service principal through its client secret (password). See Client secret credential for more details.

Properties:
azure.credential.client-secret.client-id=<client-id>
azure.credential.client-secret.tenant-id=<tenant-id>
azure.credential.client-secret.secret=<secret>

YAML:
azure:
  credential:
    client-secret:
      client-id: <client-id>
      tenant-id: <tenant-id>
      secret: <secret>

TOML:
[azure]
[azure.credential]
[azure.credential.client-secret]
client-id="<client-id>"
tenant-id="<tenant-id>"
secret="<secret>"

Groovy:
azure {
  credential {
    clientSecret {
      clientId = "<client-id>"
      tenantId = "<tenant-id>"
      secret = "<secret>"
    }
  }
}

HOCON:
{
  azure {
    credential {
      client-secret {
        client-id = "<client-id>"
        tenant-id = "<tenant-id>"
        secret = "<secret>"
      }
    }
  }
}

JSON:
{
  "azure": {
    "credential": {
      "client-secret": {
        "client-id": "<client-id>",
        "tenant-id": "<tenant-id>",
        "secret": "<secret>"
      }
    }
  }
}

UsernamePasswordCredential

The UsernamePasswordCredential authenticates a public client application using user credentials that don’t require multi-factor authentication. Visit Username password credential for more details.

Properties:
azure.credential.username-password.client-id=<client-id>
azure.credential.username-password.username=<username>
azure.credential.username-password.password=<password>

YAML:
azure:
  credential:
    username-password:
      client-id: <client-id>
      username: <username>
      password: <password>

TOML:
[azure]
[azure.credential]
[azure.credential.username-password]
client-id="<client-id>"
username="<username>"
password="<password>"

Groovy:
azure {
  credential {
    usernamePassword {
      clientId = "<client-id>"
      username = "<username>"
      password = "<password>"
    }
  }
}

HOCON:
{
  azure {
    credential {
      username-password {
        client-id = "<client-id>"
        username = "<username>"
        password = "<password>"
      }
    }
  }
}

JSON:
{
  "azure": {
    "credential": {
      "username-password": {
        "client-id": "<client-id>",
        "username": "<username>",
        "password": "<password>"
      }
    }
  }
}

Optionally, you can configure the tenant ID by setting the property azure.credential.username-password.tenant-id.

ManagedIdentityCredential

The ManagedIdentityCredential authenticates using the managed identity (system-assigned or user-assigned) of an Azure resource. If the application runs inside an Azure resource that supports Managed Identity through IDENTITY/MSI endpoints, IMDS endpoints, or both, this credential authenticates your application without any secrets to manage. Visit Managed Identity credential for more details.

Properties:
azure.credential.managed-identity.enabled=true

YAML:
azure:
  credential:
    managed-identity:
      enabled: true

TOML:
[azure]
[azure.credential]
[azure.credential.managed-identity]
enabled=true

Groovy:
azure {
  credential {
    managedIdentity {
      enabled = true
    }
  }
}

HOCON:
{
  azure {
    credential {
      managed-identity {
        enabled = true
      }
    }
  }
}

JSON:
{
  "azure": {
    "credential": {
      "managed-identity": {
        "enabled": true
      }
    }
  }
}

Note that for a user-assigned identity you must also set azure.credential.managed-identity.client-id.

AzureCliCredential

The AzureCliCredential authenticates in a development environment as the user or service principal that is logged in to the Azure CLI, and uses the CLI to authenticate the application against Azure Active Directory. Visit Azure CLI credential for more details.

Properties:
azure.credential.cli.enabled=true

YAML:
azure:
  credential:
    cli:
      enabled: true

TOML:
[azure]
[azure.credential]
[azure.credential.cli]
enabled=true

Groovy:
azure {
  credential {
    cli {
      enabled = true
    }
  }
}

HOCON:
{
  azure {
    credential {
      cli {
        enabled = true
      }
    }
  }
}

JSON:
{
  "azure": {
    "credential": {
      "cli": {
        "enabled": true
      }
    }
  }
}

IntelliJCredential

The IntelliJCredential authenticates in a development environment with the account in Azure Toolkit for IntelliJ. It uses the logged-in user information in the IntelliJ IDE to authenticate the application against Azure Active Directory. Visit IntelliJ credential for more details.

Properties:
azure.credential.intellij.enabled=true

YAML:
azure:
  credential:
    intellij:
      enabled: true

TOML:
[azure]
[azure.credential]
[azure.credential.intellij]
enabled=true

Groovy:
azure {
  credential {
    intellij {
      enabled = true
    }
  }
}

HOCON:
{
  azure {
    credential {
      intellij {
        enabled = true
      }
    }
  }
}

JSON:
{
  "azure": {
    "credential": {
      "intellij": {
        "enabled": true
      }
    }
  }
}

Note that on Windows the KeePass database path must be set with the property azure.credential.intellij.kee-pass-database-path. The KeePass database is used to read the cached credentials of the Azure Toolkit for IntelliJ plugin. On macOS and Linux, the native keychain and keyring, respectively, are accessed to retrieve the cached credentials.

Optionally, you can configure the tenant ID by setting the property azure.credential.intellij.tenant-id.

VisualStudioCodeCredential

The VisualStudioCodeCredential enables authentication in development environments where VS Code is installed with the VS Code Azure Account extension. It uses the logged-in user information in the VS Code IDE and uses it to authenticate the application against Azure Active Directory. Visit Visual Studio Code credential for more details.

Properties:
azure.credential.visual-studio-code.enabled=true

YAML:
azure:
  credential:
    visual-studio-code:
      enabled: true

TOML:
[azure]
[azure.credential]
[azure.credential.visual-studio-code]
enabled=true

Groovy:
azure {
  credential {
    visualStudioCode {
      enabled = true
    }
  }
}

HOCON:
{
  azure {
    credential {
      visual-studio-code {
        enabled = true
      }
    }
  }
}

JSON:
{
  "azure": {
    "credential": {
      "visual-studio-code": {
        "enabled": true
      }
    }
  }
}

Optionally, you can configure the tenant ID by setting the property azure.credential.visual-studio-code.tenant-id.

StorageSharedKeyCredential

The StorageSharedKeyCredential is a Shared Key credential policy that is put into a header to authorize requests. It is useful when using Shared Key authorization.
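Shared Key authorization signs each request with an HMAC-SHA256 of a canonical "string-to-sign", keyed with the Base64-decoded account key, and sends the result in an Authorization header of the form SharedKey <account-name>:<signature>. The sketch below shows only that signing step using the JDK's javax.crypto API. SharedKeySigner is a hypothetical name, and building the canonical string-to-sign (HTTP verb, standard headers, canonicalized resource) is deliberately left out; StorageSharedKeyCredential handles all of this for you.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical illustration of the Shared Key signing step; not the Azure SDK implementation. */
final class SharedKeySigner {
    /** Base64(HMAC-SHA256(UTF-8(stringToSign), Base64Decode(accountKey))). */
    static String sign(String accountKey, String stringToSign) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(Base64.getDecoder().decode(accountKey), "HmacSHA256"));
            byte[] digest = mac.doFinal(stringToSign.getBytes(StandardCharsets.UTF_8));
            return Base64.getEncoder().encodeToString(digest);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HMAC-SHA256 signing failed", e);
        }
    }

    /** Formats the Authorization header value used by Shared Key authorization. */
    static String authorizationHeader(String accountName, String signature) {
        return "SharedKey " + accountName + ":" + signature;
    }
}
```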

Using an account name and key

Properties:
azure.credential.storage-shared-key.account-name=devstoreaccount1
azure.credential.storage-shared-key.account-key=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==

YAML:
azure:
  credential:
    storage-shared-key:
      account-name: devstoreaccount1
      account-key: "Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw=="

TOML:
[azure]
[azure.credential]
[azure.credential.storage-shared-key]
account-name="devstoreaccount1"
account-key="Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw=="

Groovy:
azure {
  credential {
    storageSharedKey {
      accountName = "devstoreaccount1"
      accountKey = "Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw=="
    }
  }
}

HOCON:
{
  azure {
    credential {
      storage-shared-key {
        account-name = "devstoreaccount1"
        account-key = "Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw=="
      }
    }
  }
}

JSON:
{
  "azure": {
    "credential": {
      "storage-shared-key": {
        "account-name": "devstoreaccount1",
        "account-key": "Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw=="
      }
    }
  }
}

From a connection string
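A storage connection string is a semicolon-separated list of Key=Value settings. As a minimal illustration (not the Azure SDK's parser), the hypothetical StorageConnectionString.parse method below splits such a string, treating only the first = in each segment as the separator so that the Base64 padding at the end of AccountKey is preserved.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical connection-string parser for illustration only. */
final class StorageConnectionString {
    /** Splits "Key1=Value1;Key2=Value2;..." into an ordered map of settings. */
    static Map<String, String> parse(String connectionString) {
        Map<String, String> settings = new LinkedHashMap<>();
        for (String part : connectionString.split(";")) {
            if (part.isBlank()) {
                continue; // tolerate a trailing ';'
            }
            int eq = part.indexOf('='); // only the first '=' separates key from value
            if (eq <= 0) {
                throw new IllegalArgumentException("Malformed connection string segment: " + part);
            }
            settings.put(part.substring(0, eq).trim(), part.substring(eq + 1).trim());
        }
        return settings;
    }
}
```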

Properties:
azure.credential.storage-shared-key.connection-string=DefaultEndpointsProtocol=https;AccountName=devstoreaccount1;AccountKey=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==;BlobEndpoint=https://127.0.0.1:10000/devstoreaccount1;

YAML:
azure:
  credential:
    storage-shared-key:
      connection-string: "DefaultEndpointsProtocol=https;AccountName=devstoreaccount1;AccountKey=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==;BlobEndpoint=https://127.0.0.1:10000/devstoreaccount1;"

TOML:
[azure]
[azure.credential]
[azure.credential.storage-shared-key]
connection-string="DefaultEndpointsProtocol=https;AccountName=devstoreaccount1;AccountKey=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==;BlobEndpoint=https://127.0.0.1:10000/devstoreaccount1;"

Groovy:
azure {
  credential {
    storageSharedKey {
      connectionString = "DefaultEndpointsProtocol=https;AccountName=devstoreaccount1;AccountKey=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==;BlobEndpoint=https://127.0.0.1:10000/devstoreaccount1;"
    }
  }
}

HOCON:
{
  azure {
    credential {
      storage-shared-key {
        connection-string = "DefaultEndpointsProtocol=https;AccountName=devstoreaccount1;AccountKey=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==;BlobEndpoint=https://127.0.0.1:10000/devstoreaccount1;"
      }
    }
  }
}

JSON:
{
  "azure": {
    "credential": {
      "storage-shared-key": {
        "connection-string": "DefaultEndpointsProtocol=https;AccountName=devstoreaccount1;AccountKey=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==;BlobEndpoint=https://127.0.0.1:10000/devstoreaccount1;"
      }
    }
  }
}

5.2 Client

The Azure SDK provides a long list of management and client libraries. The example below illustrates how to create a BlobServiceClient. Other Azure clients can be created in a similar way:

import com.azure.core.credential.TokenCredential;
import com.azure.storage.blob.BlobServiceClient;
import com.azure.storage.blob.BlobServiceClientBuilder;
import io.micronaut.context.annotation.Factory;
import io.micronaut.core.annotation.NonNull;
import jakarta.inject.Singleton;

@Factory
public class BlobServiceFactory { // (1)

    @Singleton
    public BlobServiceClient blobServiceClient(@NonNull TokenCredential tokenCredential) { // (2)
        return new BlobServiceClientBuilder() // (3)
                .credential(tokenCredential)
                .endpoint(System.getenv("AZURE_BLOB_ENDPOINT"))
                .buildClient();
    }
}

| 1 | The class is a factory bean. |
| 2 | The method returns a singleton of the BlobServiceClient class. Note that the injected credential bean is of type TokenCredential, an interface that provides token-based credential authentication. |
| 3 | The client builder creates the client from the credential bean and the blob service endpoint. Every client builder class, such as BlobServiceClientBuilder, offers additional configuration options. |

6 Azure Function Support

The Azure Function module provides support for writing serverless functions with Micronaut that target the Azure Functions environment.

6.1 Simple Azure Functions

There are two modules. The first (micronaut-azure-function) is lower-level and allows you to define functions that are dependency injected by Micronaut.

To get started, follow the instructions to create an Azure Function project with Gradle or with Maven.

Then add the following dependency to the project:

implementation("io.micronaut.azure:micronaut-azure-function")

<dependency>
    <groupId>io.micronaut.azure</groupId>
    <artifactId>micronaut-azure-function</artifactId>
</dependency>

And ensure the Micronaut annotation processors are configured:

annotationProcessor("io.micronaut:micronaut-inject-java")

<annotationProcessorPaths>
    <path>
        <groupId>io.micronaut</groupId>
        <artifactId>micronaut-inject-java</artifactId>
    </path>
</annotationProcessorPaths>

You can then write a function that subclasses AzureFunction; it will be dependency injected when executed. For example:

Java:
package example;

import com.microsoft.azure.functions.annotation.BlobOutput;
import com.microsoft.azure.functions.annotation.BlobTrigger;
import com.microsoft.azure.functions.annotation.FunctionName;
import com.microsoft.azure.functions.annotation.StorageAccount;
import io.micronaut.azure.function.AzureFunction;
import io.micronaut.context.event.ApplicationEvent;
import io.micronaut.context.event.ApplicationEventPublisher;
import jakarta.inject.Inject;

public class BlobFunction extends AzureFunction { // (1)

    @Inject
    ApplicationEventPublisher eventPublisher; // (2)

    @FunctionName("copy")
    @StorageAccount("AzureWebJobsStorage")
    @BlobOutput(name = "$return", path = "samples-output-java/{name}") // (3)
    public String copy(@BlobTrigger(
            name = "blob",
            path = "samples-input-java/{name}") String content) {
        eventPublisher.publishEvent(new BlobEvent(content)); // (4)
        return content;
    }

    public static class BlobEvent extends ApplicationEvent {
        public BlobEvent(String content) {
            super(content);
        }
    }
}

Groovy:
package example

import com.microsoft.azure.functions.annotation.BlobOutput
import com.microsoft.azure.functions.annotation.BlobTrigger
import com.microsoft.azure.functions.annotation.FunctionName
import com.microsoft.azure.functions.annotation.StorageAccount
import io.micronaut.azure.function.AzureFunction
import io.micronaut.context.event.ApplicationEvent
import io.micronaut.context.event.ApplicationEventPublisher
import jakarta.inject.Inject

class BlobFunction extends AzureFunction { // (1)

    @Inject
    ApplicationEventPublisher eventPublisher // (2)

    @FunctionName("copy")
    @StorageAccount("AzureWebJobsStorage")
    @BlobOutput(name = '$return', path = "samples-output-java/{name}") // (3)
    String copy(@BlobTrigger(
            name = "blob",
            path = "samples-input-java/{name}") String content) {
        eventPublisher.publishEvent(new BlobEvent(content)) // (4)
        return content
    }

    static class BlobEvent extends ApplicationEvent {
        BlobEvent(String content) {
            super(content)
        }
    }
}

Kotlin:
package example

import com.microsoft.azure.functions.annotation.BlobOutput
import com.microsoft.azure.functions.annotation.BlobTrigger
import com.microsoft.azure.functions.annotation.FunctionName
import com.microsoft.azure.functions.annotation.StorageAccount
import io.micronaut.azure.function.AzureFunction
import io.micronaut.context.event.ApplicationEvent
import io.micronaut.context.event.ApplicationEventPublisher
import jakarta.inject.Inject

class BlobFunction : AzureFunction() { // (1)

    @Inject
    lateinit var eventPublisher: ApplicationEventPublisher<Any> // (2)

    @FunctionName("copy")
    @StorageAccount("AzureWebJobsStorage")
    @BlobOutput(name = "\$return", path = "samples-output-java/{name}") // (3)
    fun copy(@BlobTrigger(name = "blob", path = "samples-input-java/{name}") content: String): String {
        eventPublisher.publishEvent(BlobEvent(content)) // (4)
        return content
    }

    class BlobEvent(content: String) : ApplicationEvent(content)
}

| 1 | The class subclasses AzureFunction. Note that a zero-argument public constructor is required. |
| 2 | You can dependency inject fields with @Inject. In this case the injected publisher is used to publish an event. |
| 3 | You can specify the function bindings as per the Azure Functions API. |
| 4 | Injected objects can be used in your function code. |

6.2 Azure HTTP Functions

An additional module, micronaut-azure-function-http, allows you to write regular Micronaut controllers and have them executed by Azure Functions. To get started, add the micronaut-azure-function-http module:

implementation("io.micronaut.azure:micronaut-azure-function-http")

<dependency>
    <groupId>io.micronaut.azure</groupId>
    <artifactId>micronaut-azure-function-http</artifactId>
</dependency>

You then need to define a function that subclasses AzureHttpFunction and overrides the invoke method:

Java:
package example;

import com.microsoft.azure.functions.ExecutionContext;
import com.microsoft.azure.functions.HttpMethod;
import com.microsoft.azure.functions.HttpRequestMessage;
import com.microsoft.azure.functions.HttpResponseMessage;
import com.microsoft.azure.functions.annotation.AuthorizationLevel;
import com.microsoft.azure.functions.annotation.FunctionName;
import com.microsoft.azure.functions.annotation.HttpTrigger;
import io.micronaut.azure.function.http.AzureHttpFunction;
import java.util.Optional;

public class MyHttpFunction extends AzureHttpFunction { // (1)

    @FunctionName("ExampleTrigger") // (2)
    public HttpResponseMessage invoke(
            @HttpTrigger(
                    name = "req",
                    methods = {HttpMethod.GET, HttpMethod.POST}, // (3)
                    route = "{*route}", // (4)
                    authLevel = AuthorizationLevel.ANONYMOUS) // (5)
            HttpRequestMessage<Optional<String>> request, // (6)
            final ExecutionContext context) {
        return super.route(request, context); // (7)
    }
}

Groovy:
package example

import com.microsoft.azure.functions.ExecutionContext
import com.microsoft.azure.functions.HttpMethod
import com.microsoft.azure.functions.HttpRequestMessage
import com.microsoft.azure.functions.HttpResponseMessage
import com.microsoft.azure.functions.annotation.AuthorizationLevel
import com.microsoft.azure.functions.annotation.FunctionName
import com.microsoft.azure.functions.annotation.HttpTrigger
import io.micronaut.azure.function.http.AzureHttpFunction

class MyHttpFunction extends AzureHttpFunction { // (1)

    @FunctionName("ExampleTrigger") // (2)
    HttpResponseMessage invoke(
            @HttpTrigger(
                    name = "req",
                    methods = [HttpMethod.GET, HttpMethod.POST], // (3)
                    route = "{*route}", // (4)
                    authLevel = AuthorizationLevel.ANONYMOUS) // (5)
            HttpRequestMessage<Optional<String>> request, // (6)
            final ExecutionContext context) {
        return super.route(request, context) // (7)
    }
}

Kotlin:
package example

import com.microsoft.azure.functions.ExecutionContext
import com.microsoft.azure.functions.HttpMethod
import com.microsoft.azure.functions.HttpRequestMessage
import com.microsoft.azure.functions.HttpResponseMessage
import com.microsoft.azure.functions.annotation.AuthorizationLevel
import com.microsoft.azure.functions.annotation.FunctionName
import com.microsoft.azure.functions.annotation.HttpTrigger
import io.micronaut.azure.function.http.AzureHttpFunction
import java.util.Optional

class MyHttpFunction : AzureHttpFunction() { // (1)

    @FunctionName("ExampleTrigger") // (2)
    fun invoke(
            @HttpTrigger(name = "req",
                    methods = [HttpMethod.GET, HttpMethod.POST], // (3)
                    route = "{*route}", // (4)
                    authLevel = AuthorizationLevel.ANONYMOUS) // (5)
            request: HttpRequestMessage<Optional<String>>, // (6)
            context: ExecutionContext): HttpResponseMessage {
        return super.route(request, context) // (7)
    }
}

| 1 | The function class subclasses AzureHttpFunction and includes a zero-argument constructor. |
| 2 | The function name can be whatever you prefer. |
| 3 | You can choose to handle only specific HTTP methods. |
| 4 | In general you want a catch-all route as in the example, but you can customize it. |
| 5 | The auth level specifies who can access the function. Using ANONYMOUS allows everyone. |
| 6 | The received request optionally contains a body, exposed as Optional<String>. |
| 7 | The body of the method should just invoke the route method of the superclass. |

With this in place, you can write regular Micronaut controllers, as documented in the HTTP server section of the user guide, and incoming function requests are routed to the controllers and executed.

This approach allows you to develop a regular Micronaut application and deploy slices of the application as Serverless functions as desired.

| See the guide for Micronaut Azure HTTP Functions to learn more. |

6.2.1 Function Route Prefix

When you use Micronaut Azure HTTP Functions, you need to remove the api route prefix. If you want a route prefix, set the micronaut.server.context-path property instead.

By default, all function routes are prefixed with api. You can customize or remove the prefix using the extensions.http.routePrefix property in your host.json file. The following host.json removes the api route prefix by setting the prefix to an empty string:

{
  "version": "2.0",
  "extensionBundle": {
    "id": "Microsoft.Azure.Functions.ExtensionBundle",
    "version": "[2.*, 3.0.0)"
  },
  "extensions": {
    "http": {
      "routePrefix": ""
    }
  }
}

Micronaut CLI and Micronaut Launch generate a host.json file with the necessary configuration if you select the azure-function feature.

6.2.2 Access the ExecutionContext

You can bind the following types as controller method parameters:

- com.microsoft.azure.functions.ExecutionContext
- com.microsoft.azure.functions.HttpRequestMessage
- com.microsoft.azure.functions.TraceContext
- java.util.logging.Logger

7 Azure Key Vault Support

Azure Key Vault is a secure and convenient storage system for API keys, passwords, and other sensitive data. To add support for Azure Key Vault to an existing project, add the following dependencies to your build:

implementation("io.micronaut.azure:micronaut-azure-secret-manager")

<dependency>
    <groupId>io.micronaut.azure</groupId>
    <artifactId>micronaut-azure-secret-manager</artifactId>
</dependency>

implementation("io.micronaut.discovery:micronaut-discovery-client")

<dependency>
    <groupId>io.micronaut.discovery</groupId>
    <artifactId>micronaut-discovery-client</artifactId>
</dependency>

| Azure doesn’t allow _ or . in secret names, so a secret named SECRET-ONE can also be resolved as SECRET_ONE or SECRET.ONE. |

| See the guide for Securely store Micronaut application secrets in Azure Key Vault to learn more. |
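The name equivalence described above can be sketched as a simple normalization: replace _ and . with -, the separator that Key Vault accepts. KeyVaultSecretNames.toSecretName is a hypothetical helper that only illustrates the rule; it is not the secret-manager module's implementation.

```java
/** Hypothetical illustration of mapping a requested name to a Key Vault-compatible secret name. */
final class KeyVaultSecretNames {
    /** Replaces the characters Key Vault rejects in secret names ('_' and '.') with '-'. */
    static String toSecretName(String requestedName) {
        return requestedName.replace('_', '-').replace('.', '-');
    }
}
```

With this rule, SECRET_ONE, SECRET.ONE, and SECRET-ONE all map to the same stored secret, SECRET-ONE.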

7.1 Distributed Configuration

You can leverage Distributed Configuration and rely on Azure Key Vault to store your Key/Value secret pairs.

To enable it, add a bootstrap configuration file to src/main/resources (for example, bootstrap.yml, using the extension that matches your configuration format):

micronaut.application.name=hello-world

micronaut.config-client.enabled=true

azure.key-vault.vault-url=<key_vault_url>micronaut:

application:

name: hello-world

config-client:

enabled: true

azure:

key-vault:

vault-url: <key_vault_url>[micronaut]

[micronaut.application]

name="hello-world"

[micronaut.config-client]

enabled=true

[azure]

[azure.key-vault]

vault-url="<key_vault_url>"micronaut {

application {

name = "hello-world"

}

configClient {

enabled = true

}

}

azure {

keyVault {

vaultUrl = "<key_vault_url>"

}

}{

micronaut {

application {

name = "hello-world"

}

config-client {

enabled = true

}

}

azure {

key-vault {

vault-url = "<key_vault_url>"

}

}

}{

"micronaut": {

"application": {

"name": "hello-world"

},

"config-client": {

"enabled": true

}

},

"azure": {

"key-vault": {

"vault-url": "<key_vault_url>"

}

}

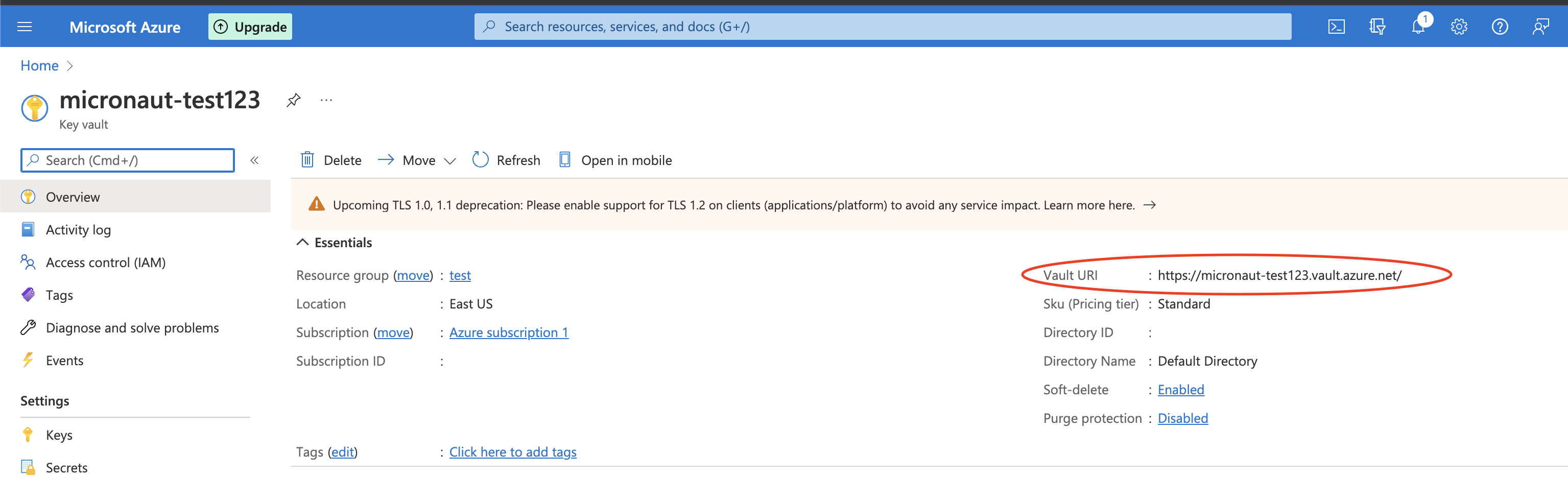

}vaultUrl can be obtained from the Azure portal:

| Make sure you have configured the correct credentials for your project by following the Setting up Azure SDK section, and that the service account designated for your application has the rights to read secrets. Follow the official Access Control guide for Azure Key Vault if you need more information. |

8 Azure Blob Storage Support

Micronaut provides a high-level, uniform object storage API that works across the major cloud providers: Micronaut Object Storage.

To get started, select the object-storage-azure feature in Micronaut Launch, or add the following dependency:

implementation("io.micronaut.objectstorage:micronaut-object-storage-azure")

<dependency>
    <groupId>io.micronaut.objectstorage</groupId>
    <artifactId>micronaut-object-storage-azure</artifactId>
</dependency>

For more information, check the Micronaut Object Storage Azure support documentation.

9 Azure Cosmos Client

Azure Cosmos DB is a fully managed NoSQL database for modern app development. Single-digit millisecond response times, and automatic and instant scalability, guarantee speed at any scale.

To add support for Azure Cosmos DB to an existing project, add the following dependency to your build.

implementation("io.micronaut.azure:micronaut-azure-cosmos")

<dependency>
    <groupId>io.micronaut.azure</groupId>
    <artifactId>micronaut-azure-cosmos</artifactId>
</dependency>

9.1 Configuration

This is an example configuration that can be used when creating a CosmosClient or CosmosAsyncClient:

Properties:
micronaut.application.name=azure-cosmos-demo
azure.cosmos.consistency-level=SESSION
azure.cosmos.endpoint=<endpoint-from-connectionstring>
azure.cosmos.key=<key-from-connectionstring>
azure.cosmos.default-gateway-mode=true
azure.cosmos.endpoint-discovery-enabled=false

YAML:
micronaut:
  application:
    name: azure-cosmos-demo
azure:
  cosmos:
    consistency-level: SESSION
    endpoint: <endpoint-from-connectionstring>
    key: <key-from-connectionstring>
    default-gateway-mode: true
    endpoint-discovery-enabled: false

TOML:
[micronaut]
[micronaut.application]
name="azure-cosmos-demo"
[azure]
[azure.cosmos]
consistency-level="SESSION"
endpoint="<endpoint-from-connectionstring>"
key="<key-from-connectionstring>"
default-gateway-mode=true
endpoint-discovery-enabled=false

Groovy:
micronaut {
  application {
    name = "azure-cosmos-demo"
  }
}
azure {
  cosmos {
    consistencyLevel = "SESSION"
    endpoint = "<endpoint-from-connectionstring>"
    key = "<key-from-connectionstring>"
    defaultGatewayMode = true
    endpointDiscoveryEnabled = false
  }
}

HOCON:
{
  micronaut {
    application {
      name = "azure-cosmos-demo"
    }
  }
  azure {
    cosmos {
      consistency-level = "SESSION"
      endpoint = "<endpoint-from-connectionstring>"
      key = "<key-from-connectionstring>"
      default-gateway-mode = true
      endpoint-discovery-enabled = false
    }
  }
}

JSON:
{
  "micronaut": {
    "application": {
      "name": "azure-cosmos-demo"
    }
  },
  "azure": {
    "cosmos": {
      "consistency-level": "SESSION",
      "endpoint": "<endpoint-from-connectionstring>",
      "key": "<key-from-connectionstring>",
      "default-gateway-mode": true,
      "endpoint-discovery-enabled": false
    }
  }
}

A CosmosClientBuilder is also available once the dependency and configuration are added to the project.

10 Azure Logging

To use Azure Monitor Logs, add the following dependency to your project:

implementation("io.micronaut.azure:micronaut-azure-logging")

<dependency>
    <groupId>io.micronaut.azure</groupId>
    <artifactId>micronaut-azure-logging</artifactId>
</dependency>

10.1 Create Azure Logging Resources

Refer to the Micronaut Azure Logging guide for information about creating the required Azure resources.

10.2 Application configuration

Three application configuration properties are required to configure the Logback appender that pushes Logback log events to Azure Monitor Logs. In addition, you can enable or disable the appender, which is enabled by default.

| Property | Type | Required | Default value | Description |
|---|---|---|---|---|
| | boolean | false | true | Whether the Logback appender is enabled |
| | String | true | none | Azure Monitor data collection endpoint URL |
| | String | true | none | The Azure Monitor data collection rule ID that is configured to collect and transform the logs |
| | String | true | none | The Azure Monitor stream name configured in the data collection rule, for example a table in a Log Analytics workspace |

10.3 Logback Configuration

Edit your src/main/resources/logback.xml file to look like this:

<configuration>
    <appender name='AZURE' class='io.micronaut.azure.logging.AzureAppender'>
        <!-- <blackListLoggerName>example.app.Application</blackListLoggerName> -->
        <encoder class='ch.qos.logback.core.encoder.LayoutWrappingEncoder'>
            <layout class='ch.qos.logback.contrib.json.classic.JsonLayout'>
                <jsonFormatter class='io.micronaut.azure.logging.AzureJsonFormatter' />
            </layout>
        </encoder>
    </appender>
    <root level='INFO'>
        <appender-ref ref='AZURE' />
    </root>
</configuration>

You can customize your JsonLayout with additional parameters, as described in the Logback JsonLayout documentation.

The AzureAppender supports blacklisting loggers: specify each logger name to exclude in a blackListLoggerName element.

| Property | Type | Required | Default value | Description |
|---|---|---|---|---|
| | | false | application-name | The subject of the log |
| | | false | host-name | The source of the log |
| | | false | 100 | Time in milliseconds between two batch publications of logs |
| | | false | 128 | The maximum number of log events sent in one batch request |
| | | false | 128 | The size of the log publishing queue |
| blackListLoggerName | | false | none | Logger name(s) to exclude |
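To illustrate how the queue size, batch size, and blacklist settings interact, here is a simplified model of a queue-and-batch publisher built only on java.util.concurrent. BatchingLogQueue is hypothetical and is not the AzureAppender source; a real appender would also call nextBatch on a timer (for example, once per publish period) and send each batch to Azure Monitor.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

/** Hypothetical model of a bounded log queue drained in batches; not the AzureAppender source. */
final class BatchingLogQueue {
    private final BlockingQueue<String> queue;
    private final int maxBatchSize;
    private final Set<String> blackListLoggerNames;

    BatchingLogQueue(int queueSize, int maxBatchSize, Set<String> blackListLoggerNames) {
        this.queue = new ArrayBlockingQueue<>(queueSize);
        this.maxBatchSize = maxBatchSize;
        this.blackListLoggerNames = Set.copyOf(blackListLoggerNames);
    }

    /** Queues a message unless its logger is blacklisted; returns false if skipped or the queue is full. */
    boolean append(String loggerName, String message) {
        if (blackListLoggerNames.contains(loggerName)) {
            return false; // excluded logger: never published
        }
        return queue.offer(message); // non-blocking: fails when the queue is full
    }

    /** Removes up to maxBatchSize queued messages, in arrival order, as one batch. */
    List<String> nextBatch() {
        List<String> batch = new ArrayList<>(maxBatchSize);
        queue.drainTo(batch, maxBatchSize);
        return batch;
    }
}
```

Keeping the queue bounded caps memory use when Azure Monitor is slow, at the cost of rejecting events once the queue is full.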

10.4 Emergency Appender

Since the Azure appender queues log messages and then sends them to Azure, some situations might result in log events not being delivered. To address such scenarios, you can configure an emergency appender to preserve those messages.
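A minimal sketch of the fallback idea, assuming a bounded queue: an event that cannot be queued, or that is still pending when a publish attempt fails, is handed to an emergency sink instead of being dropped. QueueWithEmergencyFallback is a hypothetical class; in the real configuration the sink is whichever appender you reference from the AzureAppender element.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.function.Consumer;

/** Hypothetical sketch: events that cannot be published remotely go to an emergency sink. */
final class QueueWithEmergencyFallback {
    private final BlockingQueue<String> queue;
    private final Consumer<String> emergencySink; // stands in for, e.g., a console appender

    QueueWithEmergencyFallback(int queueSize, Consumer<String> emergencySink) {
        this.queue = new ArrayBlockingQueue<>(queueSize);
        this.emergencySink = emergencySink;
    }

    /** Queues the event; if the queue is full, preserves it via the emergency sink instead. */
    void append(String event) {
        if (!queue.offer(event)) {
            emergencySink.accept(event);
        }
    }

    /** Simulates a failed remote publish: every pending event is handed to the emergency sink. */
    void failPendingPublish() {
        List<String> pending = new ArrayList<>();
        queue.drainTo(pending);
        pending.forEach(emergencySink);
    }
}
```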

Configure an appender element in your src/main/resources/logback.xml; in the example it is STDOUT, but any valid Logback appender can be used.

Inside the AzureAppender element, add an appender-ref element that references the emergency appender.

<configuration>
    <appender name='STDOUT' class='ch.qos.logback.core.ConsoleAppender'>
        <encoder>
            <pattern>%cyan(%d{HH:mm:ss.SSS}) %gray([%thread]) %highlight(%-5level) %magenta(%logger{36}) - %msg%n</pattern>
        </encoder>
    </appender>
    <appender name='AZURE' class='io.micronaut.azure.logging.AzureAppender'>
        <appender-ref ref='STDOUT'/>
        <blackListLoggerName>org.apache.http.impl.conn.PoolingHttpClientConnectionManager</blackListLoggerName>
        <encoder class='ch.qos.logback.core.encoder.LayoutWrappingEncoder'>
            <layout class='ch.qos.logback.contrib.json.classic.JsonLayout'>
                <jsonFormatter class='io.micronaut.azure.logging.AzureJsonFormatter' />
            </layout>
        </encoder>
    </appender>
    <root level='INFO'>
        <appender-ref ref='AZURE' />
    </root>
</configuration>

10.5 Browsing the logs

Refer to the Micronaut Azure Logging guide for how to retrieve log entries published to Azure Monitor Logs.

If you have trouble configuring the Azure appender, enable Logback's internal status output by setting <configuration debug='true'> in your Logback configuration.

11 Guides

See the following list of guides to learn more about working with Microsoft Azure in the Micronaut Framework:

12 Repository

You can find the source code of this project in this repository: